The challenge with AI inferencing lies in the massive computational demands and the need for lightning-fast data access. Modern AI models, particularly large language models and computer vision systems, require enormous amounts of data to be processed simultaneously while maintaining ultra-low latency. This is where storage infrastructure becomes critical to AI success.

The Storage Bottleneck in AI Inferencing

Traditional storage systems often become the bottleneck in AI inferencing pipelines. When AI models need to access large datasets, images, or model weights stored on disk, any delay in data retrieval directly impacts inference speed. This latency can mean the difference between a successful real-time application and one that fails to meet performance requirements.

AI inferencing workloads typically require:

- High bandwidth to handle massive data throughput

- Ultra-low latency for real-time decision making

- Consistent performance under varying workload conditions

- Scalable storage that grows with AI model complexity
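The bandwidth requirement is easy to quantify for the model-weights case mentioned above. The time to stream weights from storage is roughly the weight size divided by the sustained read bandwidth. The sketch below is a back-of-the-envelope calculation. The parameter count, bytes per parameter, and bandwidth figures are illustrative assumptions, not measurements of any particular system.

```python
def weight_bytes(num_params: int, bytes_per_param: int) -> int:
    """Total size of the model weights in bytes (e.g. 2 bytes/param for FP16)."""
    return num_params * bytes_per_param


def load_time_seconds(num_params: int, bytes_per_param: int,
                      bandwidth_gb_per_s: float) -> float:
    """Idealized time to read all weights at a sustained bandwidth (GB = 1e9 bytes).

    Ignores request overhead, protocol latency, and contention, so real
    load times will be longer.
    """
    return weight_bytes(num_params, bytes_per_param) / (bandwidth_gb_per_s * 1e9)


# Illustrative only: a hypothetical 70-billion-parameter model stored in FP16.
params = 70_000_000_000
for bw in (2.0, 10.0, 50.0):  # assumed sustained read bandwidths in GB/s
    t = load_time_seconds(params, 2, bw)
    print(f"{bw:>5.1f} GB/s -> {t:6.1f} s to load {weight_bytes(params, 2) / 1e9:.0f} GB")
```

For 140 GB of weights, this works out to 70 s at 2 GB/s, 14 s at 10 GB/s, and 2.8 s at 50 GB/s. The calculation shows why storage bandwidth, not just GPU speed, sets how quickly a model can be brought online.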

Cloudian’s Innovation in AI Inferencing Infrastructure

Cloudian has positioned itself at the forefront of AI inferencing infrastructure by developing storage solutions specifically designed for the unique demands of artificial intelligence workloads. Understanding that AI inferencing requires more than just fast storage, Cloudian has focused on creating systems that eliminate storage bottlenecks entirely.

The company’s approach to AI inferencing centers on delivering the high bandwidth and low latency that modern AI applications demand. By optimizing storage architecture for AI workloads, Cloudian enables organizations to deploy AI inferencing at scale without compromising on performance.

The Cloudian-NVIDIA Partnership: Pioneering GPUDirect Technology

Through this partnership, NVIDIA GPUDirect for object storage allows data to flow directly from Cloudian’s storage systems into NVIDIA GPU memory, bypassing the CPU and system memory on the data path. This direct path eliminates traditional bottlenecks and dramatically reduces latency in AI inferencing pipelines.

How GPUDirect Transforms AI Inferencing Performance

The GPUDirect implementation developed through the Cloudian-NVIDIA partnership delivers several key advantages for AI inferencing:

- Reduced Latency: By eliminating CPU involvement in data transfers, GPUDirect shortens the time models spend waiting for data, cutting inference latency.

- Increased Bandwidth: Direct GPU-to-storage communication enables much higher data throughput, allowing AI models to process larger datasets more quickly.

- Lower CPU Utilization: With the CPU no longer handling data transfers, more processing power is available for other critical tasks in the AI inferencing pipeline.

- Improved Scalability: The direct connection architecture scales more efficiently as organizations add more GPUs and storage capacity.

Real-World Impact on AI Inferencing Applications

The combination of Cloudian’s storage expertise and NVIDIA’s GPU technology through NVIDIA GPUDirect has enabled breakthrough performance in several AI inferencing scenarios:

- Autonomous Vehicle Processing: Self-driving cars require split-second decision-making based on camera and sensor data. The high bandwidth and low latency delivered by NVIDIA GPUDirect technology enable real-time processing of multiple video streams simultaneously.

- Medical Image Analysis: AI-powered diagnostic tools can now analyze CT scans, MRIs, and X-rays with dramatically reduced processing time, enabling faster patient care and more efficient healthcare workflows.

- Financial Fraud Detection: Real-time transaction monitoring systems can process thousands of transactions per second while maintaining the low latency needed to block fraudulent transactions before they complete.

- Edge AI Deployment: The efficiency gains from GPUDirect technology make it possible to deploy sophisticated AI inferencing capabilities at edge locations where power and cooling are limited.

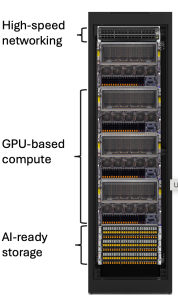

Technical Architecture: How NVIDIA GPUDirect Enables Superior AI Inferencing

The technical implementation of GPUDirect in Cloudian’s systems represents a fundamental shift in storage architecture for AI inferencing. Traditional systems require data to travel from storage through the CPU and system memory before reaching the GPU. This multi-hop journey introduces latency and consumes valuable CPU cycles.
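The difference between the two data paths can be sketched as a simple model that counts how many times each byte passes through host (CPU) memory. This is a hypothetical illustration. The hop names and the `Path` structure are invented for this example. A real GPUDirect transfer also involves a CPU-driven control path (issuing requests, registering GPU buffers) that is not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    """A hypothetical data path: an ordered tuple of (hop, touches_host_memory)."""
    name: str
    hops: tuple[tuple[str, bool], ...]

    def host_memory_bytes(self, transfer_bytes: int) -> int:
        """Bytes moved through system memory for one transfer of this size."""
        # Each hop that reads or writes host memory moves the full payload once.
        return transfer_bytes * sum(1 for _, touches_host in self.hops if touches_host)


# Traditional path: the NIC lands data in a host bounce buffer, then a second
# copy moves it from system memory into GPU memory.
traditional = Path("traditional", (
    ("storage node -> GPU server NIC", False),
    ("NIC -> host bounce buffer", True),
    ("host bounce buffer -> GPU memory", True),
))

# Direct path: the NIC writes straight into GPU memory via RDMA.
direct = Path("GPUDirect", (
    ("storage node -> GPU server NIC", False),
    ("NIC -> GPU memory (RDMA)", False),
))

size = 1 << 30  # one 1 GiB read, chosen only for illustration
for p in (traditional, direct):
    print(f"{p.name}: {len(p.hops)} hops, "
          f"{p.host_memory_bytes(size) / (1 << 30):.0f} GiB through host memory")
```

In this model the traditional path pushes every byte through system memory twice, while the direct path never touches it. That removed traffic is the source of both the latency and the CPU-utilization gains described earlier.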

NVIDIA GPUDirect creates a Remote Direct Memory Access (RDMA) pathway between Cloudian storage and NVIDIA GPUs. When an AI model needs data for inferencing, Cloudian’s peer-to-peer architecture enables multiple storage nodes to transfer data simultaneously and directly to GPU memory using the S3 API. This approach leverages Cloudian’s distributed design, which was purpose-built for parallel processing: data transfer bandwidth scales linearly with the number of storage nodes, and traditional controller limitations are bypassed entirely.
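The parallel fetch described above can be sketched in miniature. The object is split into byte ranges, each range is read from a different storage node at the same time, and every range is written straight into its final offset in a preallocated destination buffer. This is a simplified, CPU-only simulation under stated assumptions, not Cloudian’s implementation. The `FakeNode` class, `split_ranges`, and `parallel_read` are hypothetical. Each node is assumed to hold a full copy of the object. A `bytearray` stands in for GPU memory, and threads stand in for RDMA transfers that, in a real deployment, the NICs would carry out.

```python
from concurrent.futures import ThreadPoolExecutor


class FakeNode:
    """Hypothetical storage node, assumed to hold a full copy of one object."""

    def __init__(self, name: str, data: bytes):
        self.name = name
        self._data = data

    def read_range(self, start: int, end: int) -> bytes:
        """Return bytes [start, end), similar to an S3 GET with a Range header."""
        return self._data[start:end]


def split_ranges(size: int, parts: int) -> list[tuple[int, int]]:
    """Split [0, size) into `parts` contiguous, nearly equal half-open ranges."""
    step, extra = divmod(size, parts)
    ranges, start = [], 0
    for i in range(parts):
        end = start + step + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges


def parallel_read(nodes: list[FakeNode], size: int) -> bytearray:
    """Fetch one object by reading a distinct range from each node concurrently."""
    dest = bytearray(size)  # stand-in for a preallocated GPU buffer
    view = memoryview(dest)

    def fetch(node: FakeNode, rng: tuple[int, int]) -> None:
        start, end = rng
        # Write each range directly into its final offset; no reassembly step.
        view[start:end] = node.read_range(start, end)

    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        futures = [pool.submit(fetch, n, r)
                   for n, r in zip(nodes, split_ranges(size, len(nodes)))]
        for f in futures:
            f.result()  # re-raise any error from a worker
    return dest


# Usage: four simulated nodes each serve one quarter of a 1 MiB object.
payload = bytes(range(256)) * 4096
nodes = [FakeNode(f"node{i}", payload) for i in range(4)]
assert parallel_read(nodes, len(payload)) == payload
```

Because each node serves a disjoint range and writes land at their final offsets, adding nodes adds independent transfer streams. No single component has to touch every byte.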

The S3 RDMA implementation ensures that data flows from storage to GPU memory with minimal latency and maximum parallelism. Unlike centralized storage architectures that create bottlenecks at the controller level, Cloudian’s distributed peer-to-peer design means that each storage node can independently serve data directly to GPUs, enabling true parallel data delivery that scales with both storage capacity and GPU count.
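A simple bottleneck model is one way to reason about bandwidth that scales with both storage capacity and GPU count. Delivered bandwidth is capped by whichever side is slower: the storage nodes serving data in parallel, or the GPUs ingesting it. The function below is a rough sketch. The per-node and per-GPU bandwidth figures are hypothetical parameters supplied by the caller, and the model ignores network topology and contention.

```python
def delivered_bandwidth(storage_nodes: int, per_node_gb_s: float,
                        gpus: int, per_gpu_ingest_gb_s: float) -> float:
    """Aggregate GB/s under a simple min-of-two-sides bottleneck model.

    Assumes every node serves in parallel (no central controller to saturate)
    and every GPU ingests independently.
    """
    storage_side = storage_nodes * per_node_gb_s
    gpu_side = gpus * per_gpu_ingest_gb_s
    return min(storage_side, gpu_side)


# Hypothetical figures: 20 GB/s per storage node, 25 GB/s ingest per GPU, 8 GPUs.
for nodes in (2, 4, 8, 12):
    print(nodes, "nodes ->", delivered_bandwidth(nodes, 20.0, 8, 25.0), "GB/s")
```

With these assumed figures, bandwidth grows linearly with node count (40, 80, 160 GB/s) until the eight GPUs’ combined ingest of 200 GB/s becomes the limit. Adding GPUs raises that ceiling. A centralized controller would add a third term to the `min`: a fixed cap that grows with neither storage nor GPUs.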

Optimizing AI Inferencing with Cloudian Solutions

Beyond the GPUDirect partnership with NVIDIA, Cloudian has developed additional optimizations specifically for AI inferencing workloads:

- Parallel Data Distribution: Cloudian’s peer-to-peer architecture automatically distributes AI model data across multiple storage nodes, enabling parallel RDMA transfers that scale with system size.

- Intelligent Data Placement: Cloudian’s systems can automatically identify frequently accessed AI model data and position it optimally across the distributed storage nodes for maximum parallel access during inferencing operations.

- Controller-Free Scaling: Unlike traditional storage systems, Cloudian’s distributed architecture eliminates controller bottlenecks, allowing organizations to scale AI inferencing performance by simply adding more storage nodes that can all participate in parallel RDMA transfers.

- Multi-Tier Storage Integration: Cloudian solutions seamlessly integrate with various storage tiers while maintaining the parallel access capabilities, ensuring that AI inferencing workloads always access data from the most appropriate performance tier without sacrificing parallelism.

- S3 API Compatibility: As an S3-native implementation, Cloudian ensures full compatibility with the AWS S3 API.

- No Kernel-Level Modifications: Unlike some GPUDirect for file implementations, Cloudian requires no vendor-led kernel-level modifications, eliminating the security exposure such modifications introduce.
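Controller-free scaling depends on every client being able to determine which node holds a given piece of data without asking a central controller. This post does not describe Cloudian’s placement algorithm. As an assumed stand-in, the sketch below uses consistent hashing, a widely used technique for decentralized placement. It spreads chunks of a model file across nodes and shows that adding a node relocates only a fraction of them. The `Ring` class and the chunk names are hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Stable 64-bit hash of a string, used only to spread keys uniformly."""
    return int.from_bytes(hashlib.blake2b(key.encode(), digest_size=8).digest(), "big")


class Ring:
    """Consistent-hash ring with virtual nodes; any client can compute placement."""

    def __init__(self, nodes: list[str], vnodes: int = 64):
        self._points: list[tuple[int, str]] = sorted(
            (_hash(f"{node}#{v}"), node) for node in nodes for v in range(vnodes)
        )
        self._keys = [h for h, _ in self._points]

    def owner(self, chunk_id: str) -> str:
        """Node owning the first ring point clockwise from the chunk's hash."""
        i = bisect.bisect(self._keys, _hash(chunk_id)) % len(self._keys)
        return self._points[i][1]


chunks = [f"model-weights/chunk-{i:04d}" for i in range(1000)]
before = Ring([f"node{i}" for i in range(4)])
after = Ring([f"node{i}" for i in range(5)])  # scale out by one node

moved = sum(before.owner(c) != after.owner(c) for c in chunks)
print(f"{moved} of {len(chunks)} chunks move after adding a fifth node")
```

Only chunks that now fall to the new node change owners, roughly one fifth of them here. Existing nodes keep serving everything else in parallel while the cluster grows.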

The Future of AI Inferencing Infrastructure

As AI models continue to grow in complexity and organizations deploy more sophisticated AI inferencing applications, the importance of optimized storage infrastructure will only increase. The partnership between Cloudian and NVIDIA through GPUDirect technology represents just the beginning of what’s possible when storage and compute are purpose-built for AI workloads.

Looking ahead, we can expect to see continued innovation in AI inferencing infrastructure, with technologies like GPUDirect serving as the foundation for even more advanced optimizations. Organizations investing in AI inferencing today should prioritize infrastructure partners who understand these unique requirements and have proven track records of innovation.

Conclusion: Enabling Next-Generation AI Inferencing

AI inferencing success depends heavily on the underlying infrastructure’s ability to deliver data at the speed of thought. Cloudian’s partnership with NVIDIA to develop GPUDirect for object storage technology demonstrates how purpose-built solutions can eliminate traditional bottlenecks and unlock new possibilities for real-time AI applications. As artificial intelligence becomes increasingly central to business operations across industries, the storage infrastructure powering AI inferencing will continue to play a key role in accelerating workflows and boosting ROI.

Learn more at cloudian.com

Or, sign up for a free trial