Download the Reference Architecture document.

What You’ll Learn from This Document

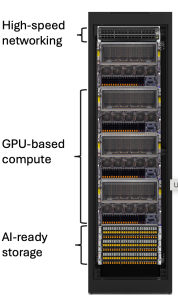

This reference architecture outlines how Cloudian’s HyperStore object storage platform can be scaled to support AI model training and inference workloads on Supermicro servers with NVIDIA GPUs. The document explains how the solution delivers both the high performance and the massive scalability that demanding AI applications require, at a lower cost than traditional file storage options.

Key insights from the document include:

- GPUDirect Storage Integration – How Cloudian leverages NVIDIA’s GPUDirect Storage technology to transfer data directly between storage and GPU memory over RDMA, improving throughput and reducing latency by avoiding an extra copy through CPU system memory.

- AI Workload Optimization – Detailed explanations of how the platform supports each stage of the AI/ML pipeline, from data ingestion through model training and inference.

- Scalable Architecture – Performance test results showing how the solution scales from 32 GPU nodes (256 GPUs) to more than 2,000 GPU nodes (16,000+ GPUs).

- Hardware Specifications – Complete server configurations built on Supermicro AS-2115HS-TNR storage servers with Micron 6500 ION NVMe SSDs.

- Network Architecture – Details of the NVIDIA Spectrum-X Ethernet networking configuration used to sustain high data throughput for AI workloads.

The document demonstrates how organizations can gain enterprise-grade storage features such as centralized data management, data security, multi-tenancy, metadata management, and cloud adjacency while maintaining the high performance that GPU-accelerated workloads need.

Why This Matters for Your AI Infrastructure

Traditional approaches to AI storage often involve expensive, specialized file systems or complex workflows where data must be copied from capacity-optimized storage to performance-optimized storage. This reference architecture shows how a single object storage platform can eliminate an entire storage tier, simplify data management, and reduce costs while still delivering the performance needed for even the most demanding AI workloads.

Download the Reference Architecture Today

If you’re planning or optimizing an AI infrastructure deployment, this document offers practical guidance for building a more efficient and cost-effective data architecture. Download the complete “Object Storage data platform reference architecture for NVIDIA GPU platforms from Supermicro” today to learn how to transform your AI data infrastructure and keep your GPUs fed with the data they need at the speed they demand.