Artificial intelligence (AI) and machine learning (ML) are driving unprecedented innovation across industries. However, the exponential growth of data required for these technologies places immense demands on storage infrastructure. Cloudian HyperStore with NVIDIA GPUDirect for Object Storage offers a groundbreaking solution: breakthrough read throughput of 35GB/s per node in a cost-effective, exabyte-scalable object storage platform.

AI and ML environments require vast volumes of data for training and processing. Emerging data types, such as synthetic data, high-resolution imagery, scientific datasets, and IoT-generated data, demand new levels of storage scale and performance. Cloudian HyperStore, integrated with NVIDIA GPUDirect, delivers an exabyte-scalable, highly concurrent, and high-performance object storage solution, optimized to efficiently manage these diverse and data-intensive workloads.

Eliminates Data Migrations

Cloudian HyperStore with GPUDirect serves as a central storage system for multiple processes throughout the AI workflow. It acts as a unified data lake, facilitating direct access to data for AI training and inference without the need for costly and time-consuming data migrations.

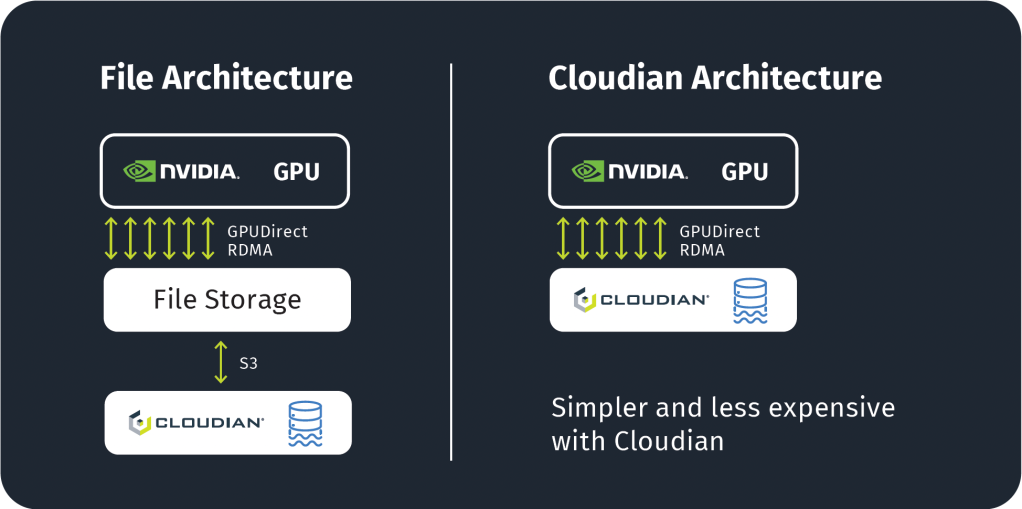

Reduces Infrastructure Costs

By eliminating the need for a separate high-performance file storage layer, Cloudian HyperStore with GPUDirect significantly reduces infrastructure costs for AI projects. Organizations can streamline their storage architecture, reducing both capital and operational expenses while improving overall system efficiency.

High Performance for Full GPU Utilization

Cloudian HyperStore with GPUDirect optimizes AI workloads by supporting RDMA data transfers. Direct communication between GPUs and storage nodes ensures consistent and scalable performance, allowing for full GPU utilization and reduced CPU power consumption. Cloudian’s parallel processing architecture maximizes performance by enabling multiple concurrent data streams.
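As a rough illustration of what the 35GB/s per-node read figure quoted in this brief could mean for sizing, the sketch below estimates how many storage nodes it would take to keep a GPU cluster fed. It assumes throughput scales linearly with node count and that each GPU needs a fixed read rate; both are simplifying assumptions, and the function name and example numbers are hypothetical rather than Cloudian guidance.

```python
import math

# Per-node read throughput quoted in this brief, in GB/s.
PER_NODE_READ_GBPS = 35.0


def nodes_needed(gpu_count, read_gbps_per_gpu, per_node_gbps=PER_NODE_READ_GBPS):
    """Estimate the storage nodes required to sustain a target read rate.

    Assumes aggregate throughput scales linearly with node count,
    which is a simplification; real clusters should be benchmarked.
    """
    if gpu_count < 0 or read_gbps_per_gpu < 0 or per_node_gbps <= 0:
        raise ValueError("counts and rates must be non-negative, node rate positive")
    aggregate_gbps = gpu_count * read_gbps_per_gpu
    return math.ceil(aggregate_gbps / per_node_gbps)


if __name__ == "__main__":
    # Hypothetical cluster: 64 GPUs, each reading 2 GB/s during training.
    print(nodes_needed(gpu_count=64, read_gbps_per_gpu=2.0))
```

For the hypothetical 64-GPU cluster, the aggregate demand is 128GB/s, so the estimate rounds up to four nodes. Network fabric, object size, and concurrency will all affect real results.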

No Kernel-Level Modifications

Unlike file platforms, Cloudian’s GPUDirect for Object requires no vendor-driven kernel-level modifications, avoiding the potential vulnerabilities associated with kernel changes. This simplifies system administration, decreases the attack surface, and lowers the risk of security breaches.

Streamlining Data Management

Effective data management is crucial for successful AI applications. Cloudian HyperStore offers rich object metadata, object versioning, and object tags, allowing organizations to organize and catalog their data efficiently. The multi-tenancy feature enables multiple data scientists or teams to work simultaneously with the same data, fostering collaboration and accelerating AI workflows.
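Because HyperStore exposes an S3-compatible API, metadata, tags, and versioning could in principle be applied with a standard S3 client. The following is a minimal sketch using the `boto3` library, not a Cloudian-documented procedure: the endpoint URL, bucket name, object key, and helper function names are all hypothetical, and credentials are assumed to come from the environment.

```python
from urllib.parse import urlencode


def build_put_object_args(bucket, key, body, metadata, tags):
    """Build keyword arguments for boto3's S3 client put_object call."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "Metadata": metadata,        # user-defined object metadata
        "Tagging": urlencode(tags),  # tags as URL-encoded key=value pairs
    }


def upload_with_versioning(endpoint_url, args):
    """Enable bucket versioning, then upload one object (needs boto3 and network access)."""
    import boto3  # third-party; imported lazily so the helper above runs without it

    s3 = boto3.client("s3", endpoint_url=endpoint_url)
    s3.put_bucket_versioning(
        Bucket=args["Bucket"],
        VersioningConfiguration={"Status": "Enabled"},
    )
    return s3.put_object(**args)


if __name__ == "__main__":
    args = build_put_object_args(
        bucket="training-data",                     # hypothetical bucket
        key="images/sample-0001.png",               # hypothetical object key
        body=b"placeholder bytes",
        metadata={"source": "synthetic"},
        tags={"project": "vision", "split": "train"},
    )
    print(args["Tagging"])
    # upload_with_versioning("https://s3.example.internal", args)  # hypothetical endpoint
```

Keeping the argument construction separate from the network call makes it easy to review how data will be cataloged before anything is written to the cluster.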

Choosing the right storage platform is paramount for the success of AI systems. Cloudian HyperStore with GPUDirect for Object Storage emerges as a game-changing solution, providing a scalable, affordable, and high-performance data storage foundation. By simplifying AI workflows, reducing costs, and optimizing GPU utilization, Cloudian empowers organizations to unlock the full potential of their AI initiatives and drive innovation at unprecedented speeds.

BENEFITS

- 35GB/s read throughput per node

- Consolidates storage with exabyte scalability

- Accelerates AI model training and inference

- Reduces cost by replacing a costly file storage layer

- Eliminates data migrations across data infrastructure

- Optimizes GPU utilization

- No kernel-level modifications

- Native S3 API compatibility with all popular ML frameworks

- Protects valuable AI data assets