NVIDIA Magnum IO GPUDirect Storage (GDS) is a technology that speeds up data transfers between GPUs and storage systems. It uses direct memory access (DMA) for local storage and remote direct memory access (RDMA) for networked storage to create a direct path between storage and GPU memory. This lowers latency and raises throughput, which benefits data-intensive applications that depend on high-speed data transfer.

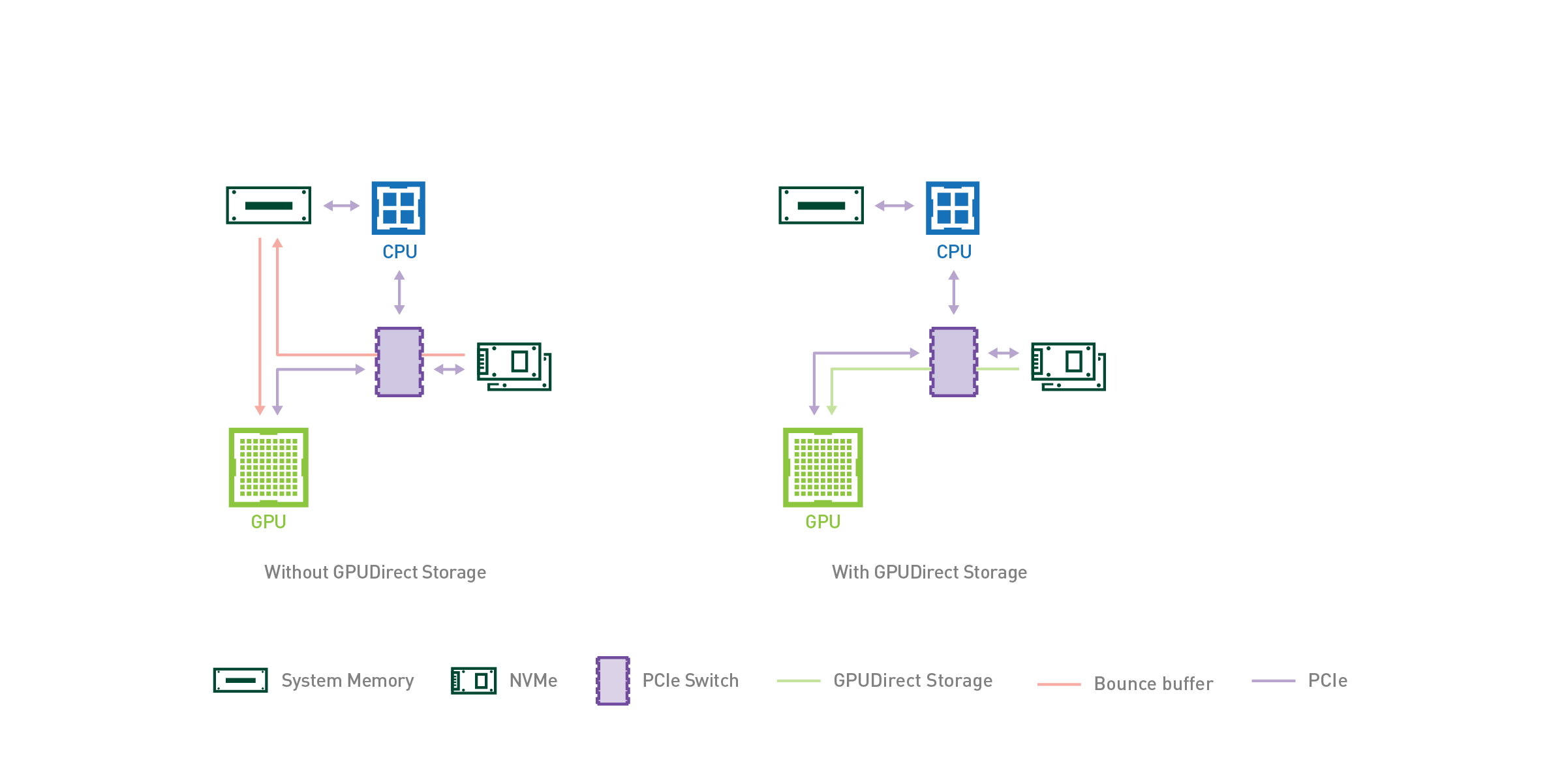

By avoiding the bounce buffer in CPU system memory, GDS lets storage data move directly into GPU memory, reducing both the latency and the CPU load associated with data movement. This technology is useful in environments like high-performance computing (HPC), artificial intelligence (AI), and machine learning (ML), where storage I/O can otherwise leave GPUs waiting for data.

This is part of a series of articles about data lake technology.

The NVIDIA GPUDirect framework provides the following key capabilities:

Remote direct memory access (RDMA) capabilities enable data transfer over a network without routing the data through the CPU. This minimizes latency and maximizes throughput by allowing data to move directly between storage and compute. In GPUDirect, RDMA provides high-speed data transfer from networked storage to GPUs.

RDMA reduces the communication overhead and accelerates the performance of applications that rely on fast data transfers across networked systems.

NVIDIA kernel extensions and drivers are crucial components of GPUDirect Storage. These drivers, such as the nvidia-fs kernel module that works alongside the user-space cuFile library, provide the software support that DMA and RDMA transfers into GPU memory require. They coordinate data transfer between storage and GPU memory while maintaining system stability.

These extensions are optimized for various operating systems and hardware configurations. They integrate closely with existing storage solutions to provide a reliable data transfer mechanism that enhances overall system performance.

Coherent memory access ensures that every processor sees the same, up-to-date view of data across different memory regions. This feature is vital for applications that require synchronized data access among multiple GPUs or between GPUs and CPUs. GPUDirect leverages coherent memory access to maintain data consistency and integrity.

By ensuring data coherence, GPUDirect Storage minimizes synchronization issues and supports real-time processing requirements. This feature is crucial for applications in AI and ML that depend on timely and accurate data handling.

GPUDirect Storage establishes a direct data path between the storage system and GPU memory that avoids a bounce buffer in CPU system memory. A DMA engine near the storage (for example, in the NVMe controller or the network adapter) moves data directly into or out of GPU memory. The application still issues storage I/O requests from the CPU, through NVIDIA's cuFile API, but the data itself no longer passes through host memory, which makes data handling faster and frees CPU cycles for other work.

The workflow typically involves the following steps:

This streamlined approach minimizes latency and maximizes throughput, allowing data-intensive applications to perform more efficiently. The RDMA capabilities further enhance this by enabling direct data transfers over a network, ensuring high-speed communication between nodes in a distributed computing environment.
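The effect of removing the intermediate copy can be illustrated with a toy model. The sketch below is not a benchmark and does not use any GDS API: the `Hop` class, the path definitions, and every bandwidth figure are hypothetical placeholders chosen for illustration. It also assumes the copies run one after another with no pipelining, which real systems often improve on.

```python
"""Toy model: bounce-buffer read path versus a direct storage-to-GPU path.

All bandwidth figures are hypothetical placeholders, not measurements.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    name: str
    bandwidth_gb_s: float  # assumed sustained bandwidth of this hop, in GB/s


def transfer_seconds(size_gb: float, hops: list[Hop]) -> float:
    """Time to move size_gb through hops executed serially (no overlap)."""
    return sum(size_gb / hop.bandwidth_gb_s for hop in hops)


# Conventional path: storage -> CPU system memory -> GPU memory (two copies).
BOUNCE_PATH = [
    Hop("storage -> system memory", 10.0),
    Hop("system memory -> GPU memory", 20.0),
]

# Direct path: DMA straight from storage into GPU memory (one copy).
DIRECT_PATH = [Hop("storage -> GPU memory", 10.0)]

if __name__ == "__main__":
    size_gb = 100.0
    bounce = transfer_seconds(size_gb, BOUNCE_PATH)
    direct = transfer_seconds(size_gb, DIRECT_PATH)
    print(f"bounce buffer: {bounce:.1f} s, direct: {direct:.1f} s")
    # prints "bounce buffer: 15.0 s, direct: 10.0 s"
```

Under these assumed numbers the direct path saves the full cost of the second copy. In practice the gain depends on the hardware, the file system, and how well the conventional path overlaps its two copies.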

GPUDirect Storage and GPUDirect RDMA are both technologies developed by NVIDIA to enhance data transfer efficiency between GPUs and other system components.

GPUDirect Storage focuses on optimizing the data transfer path between GPU memory and storage systems. It moves data directly between storage and GPU memory without staging it in CPU memory, which reduces latency and increases throughput. This is beneficial for applications that involve large datasets and require high-speed data access, such as AI, ML, and HPC workloads.

GPUDirect RDMA (remote direct memory access) is designed to facilitate high-speed data transfers between GPUs and other nodes in a network. By enabling direct memory access between GPUs over a network, GPUDirect RDMA eliminates the need for CPU involvement in data movement. This minimizes communication overhead and enhances performance in distributed computing environments.

In summary, while GPUDirect Storage streamlines data transfer between storage systems and GPU memory, GPUDirect RDMA optimizes network-based data transfers between GPUs across different systems. Both technologies aim to reduce latency and increase throughput, but they are applied in different scenarios depending on the specific data transfer requirements.

Jon Toor, CMO

With over 20 years of storage industry experience in a variety of companies including Xsigo Systems and OnStor, and with an MBA in Mechanical Engineering, Jon Toor is an expert and innovator in the ever-growing storage space.

Leverage multi-threading with GDS: Ensure your application is designed to use multiple threads to handle I/O operations. This can help in fully utilizing the high throughput provided by GPUDirect Storage.

Optimize GPU memory allocation: Properly managing GPU memory allocation and deallocation can prevent fragmentation and ensure maximum availability of memory for GDS operations.

Use high-performance storage solutions: Pair GDS with high-performance storage systems like NVMe SSDs or NVMe-over-Fabrics to fully exploit the low-latency, high-throughput capabilities of the technology.

Profile and monitor I/O performance: Use profiling tools to monitor and analyze I/O performance regularly. Identifying bottlenecks early can help in optimizing the data transfer paths and improving overall efficiency.

Adopt a tiered storage approach: Implement a tiered storage strategy where frequently accessed data is kept on faster storage tiers, while less frequently accessed data resides on slower tiers. This can balance performance and cost.
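The multi-threading and profiling tips above can be sketched together. This is a minimal illustration that uses ordinary POSIX file reads as a stand-in: it does not call GDS, and in a real GDS application each worker would instead issue cuFile reads into GPU buffers. The function names `read_chunk` and `parallel_read`, the chunk size, and the worker count are all hypothetical choices, and `os.pread` is available on Unix-like systems only.

```python
import os
import time
from concurrent.futures import ThreadPoolExecutor


def read_chunk(path: str, offset: int, length: int) -> bytes:
    """Read one byte range using its own file descriptor, so threads share no state."""
    fd = os.open(path, os.O_RDONLY)
    try:
        parts = []
        while length > 0:
            buf = os.pread(fd, length, offset)
            if not buf:  # reached end of file
                break
            parts.append(buf)
            offset += len(buf)
            length -= len(buf)
        return b"".join(parts)
    finally:
        os.close(fd)


def parallel_read(path: str, chunk_size: int = 1 << 20, workers: int = 4) -> tuple[bytes, float]:
    """Read a file in chunk_size pieces across worker threads.

    Returns the reassembled bytes and the observed throughput in MiB/s.
    """
    size = os.path.getsize(path)
    offsets = range(0, size, chunk_size)
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order, so chunks reassemble correctly.
        chunks = list(pool.map(lambda off: read_chunk(path, off, chunk_size), offsets))
    elapsed = time.perf_counter() - start
    data = b"".join(chunks)
    mib_per_s = (len(data) / (1 << 20)) / elapsed if elapsed > 0 else float("inf")
    return data, mib_per_s
```

Calling `parallel_read` with different `workers` and `chunk_size` values and comparing the reported throughput is one simple way to find where adding threads stops helping on a given storage system.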

GPUDirect Storage is widely used in various fields that demand high-speed data access and processing. This section explores some common use cases.

In HPC environments, fast data transfer is essential for achieving high computational performance. GPUDirect Storage enables direct data movement between storage and GPUs, which is crucial for applications that handle large datasets and require quick processing.

By reducing latency and increasing data throughput, GPUDirect Storage aids in achieving higher efficiency and quicker results in HPC tasks. This is beneficial in scientific research and simulations, where massive amounts of data are processed routinely.

AI and ML applications involve training models on large datasets, requiring efficient data handling. GPUDirect Storage accelerates data movement directly into GPU memory, reducing the time spent on data transfer and increasing the time available for computation.

This leads to faster training times and more efficient model development. By leveraging GPUDirect Storage, AI and ML frameworks can achieve higher performance and scalability, which are critical for complex model training and inference tasks.

Real-time data processing and analytics require quick data access and minimal latency. GPUDirect Storage’s ability to transfer data directly from storage to GPU memory without CPU involvement makes it ideal for such applications.

This capability ensures that data is available almost instantaneously for processing, enabling real-time analysis and decision-making. Industries like finance and telecommunications, where real-time analytics are pivotal, benefit significantly from GPUDirect Storage.

Scientific simulations and data visualization processes often deal with large datasets and require high-speed data transfers. GPUDirect Storage facilitates these needs by enabling direct data access to GPU memory, bypassing conventional bottlenecks.

This results in faster data rendering and more efficient simulation runs. Researchers can thereby analyze and visualize data more quickly, enabling more thorough and timely scientific investigations. GPUDirect Storage thus enhances both the speed and quality of scientific analysis and visualizations.

Cloudian was the first to announce support for GPUDirect for Object Storage. Cloudian HyperStore stands out as a storage solution specifically tailored for AI systems, offering scalable, cost-effective, and resilient object storage that meets the unique requirements of AI and ML workloads. It provides a solid foundation for both stream and batch AI pipelines, ensuring efficient management and processing of large volumes of unstructured data. With options for geo-distribution, organizations can deploy Cloudian systems as needed, choosing between all flash and HDD-based configurations to match the performance demands of their specific workload.

Today, the platform’s compatibility with popular ML frameworks such as TensorFlow, PyTorch, and Spark ML streamlines the AI workload optimization process. These frameworks are optimized for parallel training directly from object storage, enhancing performance and compatibility.

Cloudian HyperStore simplifies data management with features like rich object metadata, versioning, and tags, and fosters collaboration through multi-tenancy and HyperSearch capabilities, accelerating AI workflows. Moreover, its compatibility with multiple types of stores—feature stores, vector databases, and model stores—empowers organizations to manage, search, and utilize their data effectively, ensuring a robust and efficient AI data infrastructure.