CIO recently published an interesting article exploring why Seagate (a major Cloudian partner) repatriated its big data workloads from the public cloud to its own private cloud. In short, Seagate realized that, given the massive amounts of data it was generating, cloud storage costs plus the bandwidth costs of moving data to and from the public cloud were far too high. As a result, the company decided to bring these workloads back to its own data center, resulting in a 25% cost reduction.

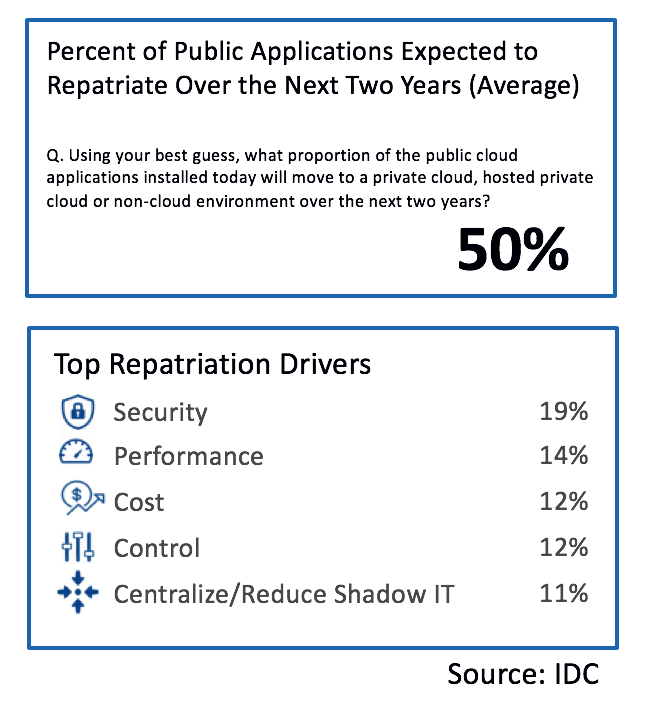

When it comes to repatriation, Seagate is not alone. According to IDC, 85 percent of IT managers say they’re moving some portion of their workloads back from public cloud environments.

So what’s driving this trend? There are several main factors.

Cost

First, there’s a general lack of visibility into public cloud costs. Many providers use complex pricing, with multiple types of charges and usage thresholds that trigger additional fees. To make matters more confusing, each major public cloud platform uses a different pricing model, which makes comparison shopping and cost prediction highly challenging. We’ve heard numerous horror stories of CIOs who were shocked when they were suddenly hit with enormous monthly public cloud bills.

The three key variables are:

- How much data are you storing?

- How frequently do you access it? Egress charges average 15-20% of the bill but can be much higher depending on the use case.

- How much WAN bandwidth is required? A quick rule of thumb: accessing 1 TB of data from the cloud over a 1 Gbps link takes about 3 hours at typical real-world throughput (roughly 2.2 hours even at full line rate). To achieve the needed access performance, you may require a costly WAN upgrade.
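To make the three variables concrete, here is a minimal Python sketch of the arithmetic. It is only an illustration: the function names, the `efficiency` parameter, and the per-GB prices are hypothetical placeholders rather than any provider's actual rates, and real bills include other charges (requests, tiers, minimums) that are not modeled here.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move data_tb terabytes (decimal, 10**12 bytes) over a WAN link.

    efficiency is an assumed fraction of the nominal link rate that is
    actually usable (1.0 = full line rate).
    """
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


def monthly_cost(stored_tb: float, egress_tb: float,
                 storage_price_per_gb: float, egress_price_per_gb: float):
    """Return (total monthly cost, egress share of that total).

    Prices are per GB per month and are supplied by the caller;
    no real provider pricing is built in.
    """
    gb_per_tb = 1000
    storage = stored_tb * gb_per_tb * storage_price_per_gb
    egress = egress_tb * gb_per_tb * egress_price_per_gb
    total = storage + egress
    return total, egress / total


# 1 TB over a 1 Gbps link at full line rate, then at an assumed 75% efficiency
print(f"{transfer_hours(1, 1):.1f} h at line rate")
print(f"{transfer_hours(1, 1, efficiency=0.75):.1f} h at 75% efficiency")

# Hypothetical example: 100 TB stored, 10 TB read back per month,
# with placeholder prices of $0.02/GB storage and $0.09/GB egress
total, egress_share = monthly_cost(100, 10, 0.02, 0.09)
print(f"${total:,.0f} per month, {egress_share:.0%} of it egress")
```

Plugging in your own stored volume, monthly read volume, and quoted prices shows how quickly the egress share grows as access frequency rises.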

Security and Control of Data

Security, along with the need to control one’s own data, is another major driver of repatriation. Organizations can only have full control over their data when it’s deployed in a private cloud or on-premises environment.

There are a number of misconceptions about how security works in the public cloud. Many organizations believe that cloud providers simply take care of most of it, but in reality, the customer needs to take a much more active role. Because public cloud environments are so different from on-prem settings, there’s confusion about what exactly needs to be done to secure a public cloud deployment. For example, with public cloud storage bucket services, it’s very common for users to misconfigure buckets that contain sensitive corporate and personal information.

Performance

Public cloud performance (i.e., data transfer time to and from the cloud) is highly variable, as it depends on the available WAN bandwidth and the cloud provider’s overall workload at a given time. When large volumes of data are involved, the latency in accessing data from a public cloud can be significant, negatively impacting business operations. In fact, we’ve seen customers move away from public cloud simply because their use case could not tolerate the latency and variability they experienced.

Vendor lock-in

Organizations can easily become overly dependent on a given cloud provider. If your entire system was developed using tools from a single cloud vendor, it may be difficult to switch to a new vendor, and your options are limited if the provider starts charging more for the same services. The data egress fees mentioned earlier are another way cloud providers try to discourage customers from leaving.

The Best of Both Worlds

Of course, the public cloud serves many important uses. Because it can scale up and down on demand, the public cloud is great for applications with highly elastic compute requirements. Consider an e-commerce app that experiences massive spikes of traffic at certain times of the year, after which traffic levels go back down. In addition, the cloud can be an effective and cost-efficient option for backup and disaster recovery.

Cloudian supports a hybrid approach that provides a scalable and simple way for organizations to store the bulk of their data on-premises or in a private cloud, then easily expand deployments to any of the major public clouds when circumstances call for it. Because Cloudian object storage is fully S3-compatible, it provides seamless integration between on-prem and public cloud environments, making it easy for customers to move data back and forth. In addition, we give users a single view of data across distributed environments, avoiding the siloed management required with traditional storage offerings. Finally, our new HyperIQ observability and analytics solution provides continuous monitoring of, and insight into, Cloudian and related infrastructure, encompassing interconnected users, applications, network connections and storage devices, all from a single interface.

Visit these resources to learn more about how Cloudian supports cloud repatriation and hybrid deployments.