
Data lake storage solutions are systems for storing vast amounts of structured, semi-structured, and unstructured data. Unlike traditional storage systems, these solutions allow organizations to collect and retain raw data in its native format. Data lakes are suitable for use cases such as advanced analytics, machine learning, and big data processing, where flexibility in data storage and retrieval is critical.

The main idea behind data lake storage is to create a centralized repository for all types of data. Data lakes are built to handle immense scalability requirements and let users fetch and analyze data on demand without predefined schemas. This agility makes them valuable for organizations dealing with rapidly growing data volumes and evolving analytical needs.


Data lake storage solutions are architected for massive scalability, which is essential for managing modern data volumes generated by IoT devices, user interactions, logs, and transactional systems. These systems typically use distributed file systems such as HDFS, or object stores such as Amazon S3 or Azure Data Lake Storage, which allow data to be stored across multiple nodes.

Elasticity is another critical capability, enabling storage resources to expand or contract automatically based on current usage patterns. This helps organizations avoid both over-provisioning and under-utilization of resources. Distributing data across multiple nodes also supports high availability and fault tolerance, so data is not lost even if individual nodes fail.
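To make the fault-tolerance point concrete, here is a minimal sketch of how an object might be assigned to several nodes so that it stays readable when one of them fails. It uses rendezvous hashing, which is one common placement technique and not necessarily what any particular product uses; the node names, the object key, and the replica count of 3 are all illustrative assumptions.

```python
import hashlib


def place_replicas(object_key: str, nodes: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct nodes for an object using rendezvous hashing."""

    def score(node: str) -> int:
        # Each (node, key) pair gets a stable pseudo-random score.
        digest = hashlib.sha256(f"{node}:{object_key}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # The highest-scoring nodes hold the replicas.
    ranked = sorted(nodes, key=score, reverse=True)
    return ranked[:replicas]


def readable_from(placement: list[str], failed: set[str]) -> list[str]:
    """Return the replicas that are still reachable after some nodes fail."""
    return [node for node in placement if node not in failed]


# Hypothetical cluster and object key, for illustration only.
nodes = ["node-a", "node-b", "node-c", "node-d", "node-e"]
key = "logs/2024/01/events.json"

placement = place_replicas(key, nodes)
survivors = readable_from(placement, failed={placement[0]})
print("replicas on:", placement)
print("still readable from:", survivors)
```

A useful property of this scheme for elastic clusters is that adding a node never reshuffles objects among the existing nodes: an object either stays where it was or moves to the new node.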

Data lakes are format-agnostic, meaning they can store virtually any type of data without modification, whether structured, semi-structured, or unstructured.

This flexibility eliminates the need for upfront transformation or standardization, enabling faster data ingestion from varied sources like APIs, sensors, clickstreams, and enterprise applications. It also supports a more holistic data analysis process, where analysts can correlate structured records with unstructured content like support tickets or product reviews.

The schema-on-read model defers data modeling until query time. This means raw data can be ingested without needing to define tables or data types in advance. When users run queries, they apply schemas dynamically to interpret the data in the required format. This approach significantly increases agility and supports exploratory analysis.

Different teams can interpret the same dataset according to their needs. For example, a marketing team may look at user data to understand engagement patterns, while a data science team might apply a different schema to the same data to train a machine learning model. Schema-on-read also reduces time-to-insight, because analysts aren’t bottlenecked by rigid data definitions.
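The schema-on-read idea can be sketched in a few lines of Python. The raw events are stored once as untyped JSON strings, and each team applies its own schema only when reading. The field names, the sample events, and the two schemas below are hypothetical; a real data lake would typically do this through a query engine and not through hand-written casts.

```python
import json
from datetime import datetime

# Raw data as ingested: no table definition, every value is still a string.
RAW_EVENTS = [
    '{"user_id": "42", "event": "click", "ts": "2024-01-05T10:15:00", "duration_ms": "1200"}',
    '{"user_id": "7", "event": "view", "ts": "2024-01-05T10:16:30"}',
]

# Two schemas over the same raw data: field name -> function that casts the value.
MARKETING_SCHEMA = {"user_id": str, "event": str, "ts": datetime.fromisoformat}
DATA_SCIENCE_SCHEMA = {"user_id": int, "duration_ms": float}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and cast only the fields named in `schema`."""
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for field_name, cast in schema.items():
            value = record.get(field_name)
            # Missing fields become None; nothing is rejected at ingest time.
            row[field_name] = cast(value) if value is not None else None
        yield row


marketing_rows = list(read_with_schema(RAW_EVENTS, MARKETING_SCHEMA))
model_rows = list(read_with_schema(RAW_EVENTS, DATA_SCIENCE_SCHEMA))

print(marketing_rows[0])
print(model_rows[0])
```

Note that the second event has no `duration_ms` field. Because the schema is applied at read time, the event is still ingested, and the data science view simply sees `None` for it.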

Data lake solutions provide APIs and connectors that integrate with a broad ecosystem of analytics tools.

These integrations allow analysts and data scientists to process and analyze data directly in the lake without needing to replicate it to separate environments. This reduces data movement, minimizes latency, and improves the efficiency of analytics pipelines. Additionally, it enables interactive querying, batch processing, and real-time analytics from a single data source.

Without metadata, a data lake can quickly become a “data swamp”—a repository full of unorganized, unreadable data. Effective metadata management systems address this by capturing details about each dataset’s structure, source, format, and usage.

Data cataloging tools provide searchable interfaces for discovering available datasets, their schemas, and associated tags or business glossaries. They often include features like:

These features improve data discoverability and usability, enabling teams to find, understand, and use data while maintaining compliance with internal and external regulations.
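As a rough illustration of what a catalog records, the sketch below stores each dataset's source, format, schema, and tags, and offers a simple keyword search over them. The `DatasetEntry` and `Catalog` classes and the sample datasets are hypothetical and much simpler than a production cataloging tool.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Metadata describing one dataset in the lake."""
    name: str
    source: str
    data_format: str
    schema: dict[str, str]  # column name -> type name
    tags: set[str] = field(default_factory=set)


class Catalog:
    """In-memory catalog with case-insensitive keyword search."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, term: str) -> list[str]:
        """Return names of datasets whose metadata mentions `term`."""
        term = term.lower()
        hits = []
        for entry in self._entries.values():
            # Search the name, source, format, column names, and tags.
            haystack = [entry.name, entry.source, entry.data_format,
                        *entry.schema, *entry.tags]
            if any(term in text.lower() for text in haystack):
                hits.append(entry.name)
        return sorted(hits)


catalog = Catalog()
catalog.register(DatasetEntry(
    name="clickstream_raw", source="web-frontend", data_format="json",
    schema={"user_id": "string", "ts": "timestamp"}, tags={"pii", "marketing"},
))
catalog.register(DatasetEntry(
    name="support_tickets", source="helpdesk-export", data_format="csv",
    schema={"ticket_id": "int", "body": "text"}, tags={"support"},
))

print(catalog.search("pii"))
print(catalog.search("ticket"))
```

Tagging datasets that contain personal data, as with the `pii` tag here, is one simple way a catalog can support compliance checks.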

Cloudian HyperStore is an on-premises, S3-compatible object storage platform purpose-built for scalable, cost-efficient data lake environments. It supports hybrid and private cloud deployments and enables analytics-in-place by integrating directly with platforms like Teradata, Vertica, and Microsoft SQL Server. HyperStore is designed to meet the performance, scalability, and data protection requirements of modern analytics workloads while minimizing total cost of ownership.

Key features include:

Dell PowerScale is a scale-out NAS solution designed to support AI, machine learning, and unstructured data workloads. It is part of Dell’s AI Data Platform, enabling data access and mobility across edge, core, and cloud environments. It helps maximize the use of GPU resources with parallelized data streams and a unified data lake architecture.

Key features include:

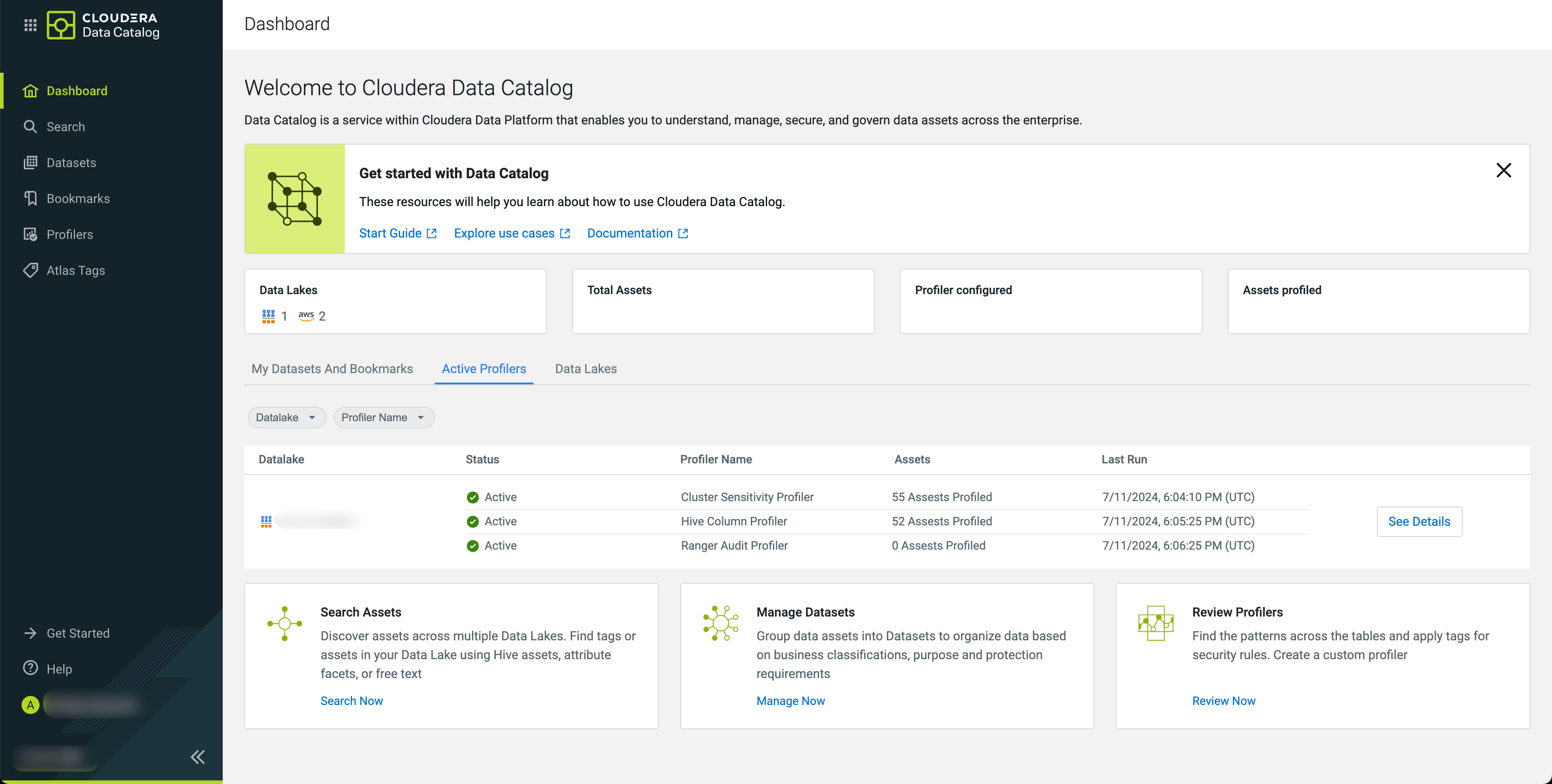

Cloudera Data Platform (CDP) provides a managed data lake service that emphasizes governance, metadata management, and secure access across hybrid and multi-cloud environments. It centralizes schema and metadata control using Cloudera Shared Data Experience (SDX), allowing organizations to apply consistent security and auditing policies.

Key features include:

VAST Data offers a scale-out storage platform that merges fast transactional processing with large-scale analytics. It unifies structured and unstructured data under a global namespace and enables querying without traditional bottlenecks such as caching or ETL pipelines. Its system architecture uses a columnar object format optimized for NVMe.

Key features include:

Apache Hudi is an open-source data lakehouse platform intended to bring database-like functionality to data lakes. It supports incremental data processing, allowing new data to be ingested and queried with low latency. Hudi provides ACID transaction guarantees, time travel capabilities, and schema evolution.
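The concepts of upserts, time travel, and incremental reads can be illustrated with a toy commit log. This is a conceptual sketch only: `VersionedTable` and its methods are hypothetical names, integer commit times are an assumption, and none of this reflects Hudi's actual API or on-disk layout.

```python
from typing import Optional


class VersionedTable:
    """Toy table that keeps every commit so past states can be rebuilt."""

    def __init__(self) -> None:
        # Ordered list of (commit_time, {record_key: row}).
        self._commits: list[tuple[int, dict[str, dict]]] = []

    def upsert(self, commit_time: int, rows: dict[str, dict]) -> None:
        """Record a commit that inserts new keys or overwrites existing ones."""
        if self._commits and commit_time <= self._commits[-1][0]:
            raise ValueError("commit times must be strictly increasing")
        self._commits.append((commit_time, dict(rows)))

    def snapshot(self, as_of: Optional[int] = None) -> dict[str, dict]:
        """Time travel: replay commits up to and including `as_of`."""
        state: dict[str, dict] = {}
        for commit_time, rows in self._commits:
            if as_of is not None and commit_time > as_of:
                break
            state.update(rows)
        return state

    def incremental(self, since: int) -> dict[str, dict]:
        """Incremental read: latest version of rows changed after `since`."""
        changed: dict[str, dict] = {}
        for commit_time, rows in self._commits:
            if commit_time > since:
                changed.update(rows)
        return changed


table = VersionedTable()
table.upsert(1, {"u1": {"plan": "free"}, "u2": {"plan": "free"}})
table.upsert(2, {"u1": {"plan": "pro"}})   # update an existing record
table.upsert(3, {"u3": {"plan": "free"}})  # insert a new record

print(table.snapshot())          # current state
print(table.snapshot(as_of=1))   # state as of the first commit
print(table.incremental(since=1))  # only what changed after commit 1
```

A downstream job that remembers the last commit it processed can call `incremental` with that value and handle only the changed rows, which is the basic idea behind incremental processing.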

Key features include:

Data lake storage solutions have become essential for organizations seeking to manage and extract value from increasingly diverse and large-scale data. By enabling flexible, scalable, and secure storage of all data types, they support modern analytics, AI, and data science initiatives. Their ability to decouple storage from compute, apply schema-on-read, and integrate with a range of tools allows organizations to accelerate time-to-insight, adapt to changing requirements, and maintain governance across complex data landscapes.