The Answer for Big Data Storage

Big Data analytics delivers insights, and the bigger the dataset, the more fruitful the analyses. However, big data storage creates big challenges: cost, scalability, and data protection. To derive insight from information, you need affordable, highly scalable storage that’s simple, reliable, and compatible with the tools you have.

What Is Big Data Storage?

Big data storage is a compute-and-storage architecture you can use to collect and manage huge-scale datasets and perform real-time data analyses. These analyses can then be used to generate intelligence from metadata.

Typically, big data storage is composed of hard disk drives due to the media’s lower cost. However, flash storage is gaining popularity due to its decreasing cost. When flash is used, systems can be built purely on flash media or can be built as hybrids of flash and disk storage.

Data within big data datasets is typically unstructured. To accommodate this, big data storage is usually built on object and file-based storage. These storage types are not restricted to specific capacities, and volumes typically scale to terabyte or petabyte sizes.

Big Data Storage Challenges

When configuring and implementing big data storage, there are a few common challenges you might encounter. Each of these challenges takes a different shape on the public cloud than with on-premises storage.

| Challenge | Cloud vs. On-Premise |
|---|---|
| Size and storage costs: Big data grows geometrically, requiring substantial storage space. As data sources are added, these demands increase further and need to be accounted for. When implementing big data storage, you need to ensure that it is capable of scaling at the same rate as your data collection. | Public cloud storage services like Amazon S3 offer simplicity and high durability. However, storage is priced per GB/month, with extra fees for data processing and network egress. Running big data on-premises delivers major cost savings because it eliminates these large, ongoing costs. |
| Data transfer rates: When you need to transfer large volumes of data, high transfer rates are key. In big data environments, data scientists must be able to move data quickly from primary sources to their analysis environment. | Public cloud resources are often not well suited to this demand. On-premises, you can leverage fast LAN network connections, or even directly connect storage to the machines that store the data. |
| Security: Big data frequently contains sensitive data, such as personally identifiable information (PII) or financial data. This makes data a prime target for criminals and a liability if left unprotected. Even unintentional corruption of data can have significant consequences. | To ensure your data is sufficiently protected, big data storage systems need to employ encryption and access control mechanisms. Systems also need to be capable of meeting any compliance requirements in place for your data. Generally, you’ll have greater control over data security on-premises or in private clouds than on public clouds. |
| High availability: Regardless of what resources are used, you need to ensure that data remains highly available. You should have measures in place to deal with infrastructure failures. You also need to ensure that you can reliably and efficiently retrieve archived data. | Public clouds have strong support for this requirement. When running on-premises, ensure your big data storage solution supports clustering and replication of storage units, to provide redundancy and high durability on par with cloud storage services. |
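To make the size and cost challenge concrete, here is a minimal sketch that projects capacity under geometric growth and estimates a monthly public cloud bill from per-GB storage and egress rates. The function names, the growth rate, and the prices are illustrative assumptions, not figures from any provider's price list.

```python
def project_capacity_tb(initial_tb: float, monthly_growth: float, months: int) -> float:
    """Capacity after `months` of compound (geometric) growth."""
    return initial_tb * (1 + monthly_growth) ** months


def monthly_cloud_cost(stored_tb: float, egress_tb: float,
                       price_per_gb_month: float, egress_price_per_gb: float) -> float:
    """Storage charge plus network egress charge for one month (1 TB = 1000 GB)."""
    gb_per_tb = 1000
    storage_charge = stored_tb * gb_per_tb * price_per_gb_month
    egress_charge = egress_tb * gb_per_tb * egress_price_per_gb
    return storage_charge + egress_charge


if __name__ == "__main__":
    # Hypothetical inputs: 100 TB today, growing 5% per month for a year.
    capacity = project_capacity_tb(100, 0.05, 12)
    # Hypothetical prices: $0.02 per GB-month stored, $0.05 per GB of egress.
    cost = monthly_cloud_cost(capacity, egress_tb=10,
                              price_per_gb_month=0.02, egress_price_per_gb=0.05)
    print(f"{capacity:.1f} TB after 12 months, about ${cost:,.0f} per month")
```

Substituting your own growth rate and your provider's published rates gives a rough baseline to compare against the amortized cost of on-premises capacity.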

Big Data Storage Key Considerations

When implementing big data storage solutions, there are several best practices to consider.

Define Requirements

Start by inventorying and categorizing your data. Take into account frequency of access, latency tolerance, and compliance restrictions.

Use Data Tiering

Use a storage solution that lets you move data to lower-cost data tiers if it needs lower durability, lower performance, or less frequent access.
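A tiering policy can be as simple as a rule that maps access recency and latency tolerance to a tier. The sketch below is one possible shape for such a rule. The tier names (`hot`, `warm`, `archive`), the `Dataset` fields, and the thresholds are all hypothetical and would need to be matched to your own storage platform.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    days_since_last_access: int
    max_latency_ms: int  # slowest response time that consumers can tolerate


def choose_tier(ds: Dataset) -> str:
    """Pick a tier from access recency and latency tolerance.

    The thresholds (30 days, 180 days, 1000 ms) are illustrative only.
    """
    # Recently used or latency-sensitive data stays on the fastest tier.
    if ds.days_since_last_access <= 30 or ds.max_latency_ms < 1000:
        return "hot"
    # Data touched within the last six months moves to a cheaper tier.
    if ds.days_since_last_access <= 180:
        return "warm"
    # Everything else is a candidate for the lowest-cost tier.
    return "archive"


if __name__ == "__main__":
    for ds in (Dataset("clickstream", 3, 5000), Dataset("2019-logs", 400, 60000)):
        print(ds.name, "->", choose_tier(ds))
```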

Disaster Recovery

Set policies for data backup and restoration, and ensure storage technology meets your Recovery Time Objective (RTO) and Recovery Point Objective (RPO).
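As a rough check of a backup policy against these objectives, the sketch below treats the worst-case data loss as the interval between backups (compared with the RPO) and the restore time as data size divided by sustained restore throughput (compared with the RTO). The function names and the example figures are assumptions for illustration, and real restores usually carry extra overhead that this simple model ignores.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case data loss is the time elapsed since the last backup."""
    return backup_interval <= rpo


def estimated_restore_time(data_tb: float, restore_gb_per_s: float) -> timedelta:
    """Time to restore `data_tb` at a sustained throughput (1 TB = 1000 GB)."""
    return timedelta(seconds=data_tb * 1000 / restore_gb_per_s)


def meets_rto(data_tb: float, restore_gb_per_s: float, rto: timedelta) -> bool:
    """True if the estimated restore time fits within the RTO."""
    return estimated_restore_time(data_tb, restore_gb_per_s) <= rto


if __name__ == "__main__":
    # Hypothetical policy: hourly backups, 36 TB to restore at 1 GB/s.
    print("RPO met:", meets_rpo(timedelta(hours=1), rpo=timedelta(hours=4)))
    print("Restore estimate:", estimated_restore_time(36, 1.0))
    print("RTO met:", meets_rto(36, 1.0, rto=timedelta(hours=8)))
```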

Cloudian® HyperStore® and Splunk SmartStore reduce big data storage costs by 60% while increasing storage scalability. Together they provide an exabyte-scalable storage pool that is separate from your Splunk indexers.

With SmartStore, Splunk indexers retain data only in hot buckets that contain newly indexed data. Older data resides in warm buckets and is stored within the scalable and highly cost-effective Cloudian cluster.

Elasticsearch Backup and Data Protection

Elasticsearch, the leading open-source indexing and search platform, is used by enterprises of all sizes to index, search, and analyze their data and gain valuable insights for making data-driven business decisions. Ensuring the durability of these valuable insights and accompanying data assets has become critical to enterprises for reasons ranging from compliance and archival to continued business success.

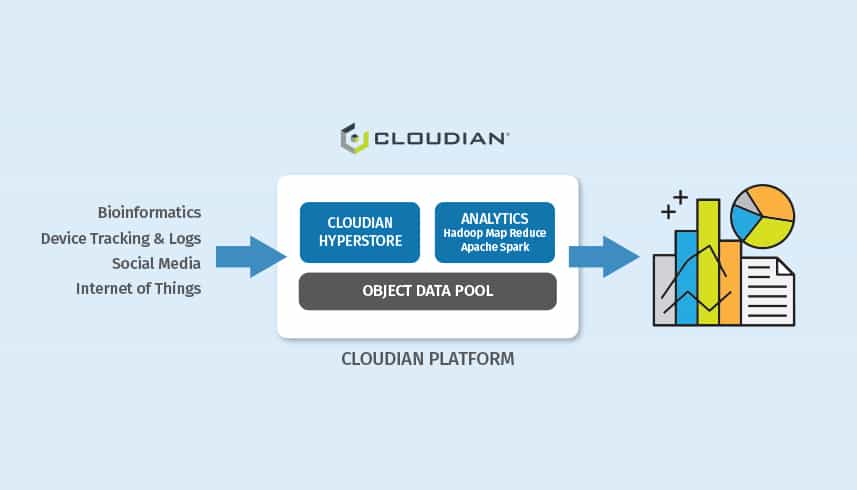

Optimize Your Big Data Analytics Environment for Performance, Scale, and Economics

Improve data insights, data management, and data protection for more users with more data within a single platform.

Combining Cloudera’s Enterprise Data Hub (EDH) with Cloudian’s limitlessly scalable object-based storage platform provides a complete end-to-end approach to store and access unlimited data with multiple frameworks.

Leading Swiss Financial Institution Deploys Cloudian Storage for Hadoop Big Data Archive

Benefits

- Certified by Hortonworks

- Scales compute resources independent of storage

- No minimum block size requirement

- Reduces big data storage footprint with erasure coding

- Increases performance with replicas that mimic HDFS

- Compresses data on the backend without altering the format

- Enables data protection and collaboration with replication across sites
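The footprint benefit of erasure coding in the list above comes down to simple arithmetic: replication stores every byte once per replica, while a k+m erasure code stores (k+m)/k bytes per usable byte. The sketch below compares the two. The 4+2 scheme is an assumed example rather than a documented Cloudian default, and three replicas is used because it is the HDFS default replication factor.

```python
def raw_capacity_replication(usable_tb: float, replicas: int) -> float:
    """Raw capacity needed when every object is stored `replicas` times."""
    return usable_tb * replicas


def raw_capacity_erasure_coding(usable_tb: float, data_fragments: int,
                                parity_fragments: int) -> float:
    """Raw capacity needed for a k+m erasure code: usable * (k + m) / k."""
    return usable_tb * (data_fragments + parity_fragments) / data_fragments


if __name__ == "__main__":
    usable = 100  # TB of usable data (hypothetical)
    replicated = raw_capacity_replication(usable, replicas=3)
    coded = raw_capacity_erasure_coding(usable, data_fragments=4, parity_fragments=2)
    print(f"3x replication: {replicated} TB raw; 4+2 erasure coding: {coded} TB raw")
```

With these assumed parameters, erasure coding needs half the raw capacity of three-way replication while still tolerating the loss of any two fragments.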