What Are AI Storage Providers?

AI storage providers offer infrastructure and solutions optimized for managing the massive volumes of data that AI workloads require. Unlike traditional storage systems, these solutions are designed to handle large and varied datasets: structured data; unstructured data such as images, video, and audio; and semi-structured data such as JSON or XML. They are engineered to provide high-speed data access and to scale with AI training and inference.

These providers focus on the demanding requirements of AI workflows, keeping data continuously available for training large models and for real-time AI tasks. Many offer advanced data management features such as replication, snapshots, and tiered storage, which protect data integrity and accessibility while reducing costs through better use of resources.

This is part of a series of articles about AI infrastructure.

Key Requirements for AI Storage Solutions

High Throughput and Low Latency

AI workloads range from training neural networks to serving models for real-time inference. Training typically reads vast amounts of data in parallel across many GPUs or CPUs, so storage must deliver data at high rates without becoming a bottleneck. A slow storage pipeline leaves expensive accelerators waiting for data, lengthening training runs and raising costs.
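To see why throughput matters, it helps to estimate how fast storage must feed the accelerators. The sketch below is a back-of-envelope calculation, assuming each GPU consumes a steady number of samples per second and that every sample is read from storage (no host-side caching). The function name, the `headroom` multiplier, and all numbers are hypothetical.

```python
def required_read_throughput_gib_s(num_gpus: int,
                                   samples_per_gpu_per_s: float,
                                   avg_sample_bytes: float,
                                   headroom: float = 1.0) -> float:
    """Aggregate read rate (GiB/s) storage must sustain so data loading never stalls the GPUs.

    Assumes every sample is fetched from storage each time it is used (no caching).
    `headroom` is a hypothetical safety multiplier for bursts and uneven loading.
    """
    bytes_per_s = num_gpus * samples_per_gpu_per_s * avg_sample_bytes * headroom
    return bytes_per_s / 2**30  # bytes/s -> GiB/s


# Hypothetical cluster: 64 GPUs, each consuming 2,000 images/s averaging 150 KiB.
need = required_read_throughput_gib_s(64, 2000, 150 * 1024)
print(f"steady state: {need:.1f} GiB/s")  # -> steady state: 18.3 GiB/s
with_headroom = required_read_throughput_gib_s(64, 2000, 150 * 1024, headroom=1.5)
print(f"with 1.5x headroom: {with_headroom:.1f} GiB/s")
```

Doubling the GPU count or the average sample size doubles the requirement, which is why storage throughput has to scale along with the compute cluster.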

Low latency is just as important, especially for inference, where responses must be near-instantaneous. For example, autonomous vehicles and edge AI applications rely on real-time data reads and writes. Optimized I/O paths, parallel file systems, and NVMe storage are commonly used to meet these demands.

Linear Scalability to Exabytes

AI workloads already process massive amounts of data, and the rise of AI reasoning is expected to increase that volume sharply. Storage systems must therefore grow in both capacity and throughput so that AI workflows can retain the historical data that improves reasoning over time. Ideally, this growth is linear: each node added to the cluster contributes a proportional share of capacity and performance, so the system can expand to exabyte scale without re-architecture.
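Because both capacity and throughput must grow, the cluster size is set by whichever requirement needs more nodes. A minimal sizing sketch, assuming ideal linear scaling (every node adds the same usable capacity and throughput); the helper name and per-node figures are hypothetical, and real clusters can scale less than linearly because of network or metadata limits:

```python
import math


def nodes_needed(capacity_tb: float, throughput_gib_s: float,
                 node_capacity_tb: float, node_throughput_gib_s: float) -> int:
    """Smallest node count meeting both targets, assuming perfectly linear scaling."""
    for_capacity = math.ceil(capacity_tb / node_capacity_tb)
    for_throughput = math.ceil(throughput_gib_s / node_throughput_gib_s)
    return max(for_capacity, for_throughput)


# Hypothetical node: 400 TB usable, 30 GiB/s sustained reads.
today = nodes_needed(2_000, 120, 400, 30)      # capacity needs 5, throughput needs 4
next_year = nodes_needed(6_000, 120, 400, 30)  # dataset triples: capacity drives 15 nodes
faster = nodes_needed(2_000, 300, 400, 30)     # more GPUs: throughput drives 10 nodes
print(today, next_year, faster)  # -> 5 15 10
```

When one dimension dominates, the other is over-provisioned, so it is worth checking how each candidate system lets capacity and performance grow.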

Data Management Features

An AI storage system must include advanced data management capabilities that support the end-to-end AI lifecycle. Automated data tiering places each piece of data on the most cost-effective or performance-appropriate storage tier: frequently accessed (hot) data stays on high-speed media, while rarely accessed (cold) data moves to lower-cost archival storage.
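A tiering policy is often driven by access recency. The sketch below classifies objects by the time since their last access, assuming hypothetical 7-day and 90-day thresholds and three tiers; actual products expose their own policy engines and settings, and the object keys shown are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ObjectInfo:
    key: str
    last_access: datetime


# Hypothetical thresholds; tune to the workload's real access patterns.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


def choose_tier(obj: ObjectInfo, now: datetime) -> str:
    """Pick a tier from the time elapsed since the object was last read."""
    age = now - obj.last_access
    if age <= HOT_WINDOW:
        return "hot"   # e.g., NVMe flash
    if age <= WARM_WINDOW:
        return "warm"  # e.g., capacity-optimized disk
    return "cold"      # e.g., archive tier


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
objects = [
    ObjectInfo("datasets/train/shard-0001.tar", now - timedelta(days=1)),
    ObjectInfo("datasets/val/shard-0001.tar", now - timedelta(days=30)),
    ObjectInfo("checkpoints/run-17/step-1000.pt", now - timedelta(days=400)),
]
for obj in objects:
    print(f"{obj.key}: {choose_tier(obj, now)}")
```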

Additional capabilities such as snapshots, mirroring, and erasure coding improve data availability and protect against corruption: snapshots preserve point-in-time copies that can be restored after accidental deletion or corruption, while mirroring and erasure coding keep data readable when drives or nodes fail. Backup and disaster recovery are equally important for minimizing downtime after storage failures. With these features in place, teams can focus on AI objectives rather than data logistics.
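The trade-off between mirroring and erasure coding comes down to arithmetic. With n-way replication, every byte is stored n times and the data survives n - 1 lost copies; with k+m erasure coding, data is split into k data fragments plus m parity fragments, costing (k+m)/k units of raw capacity per unit of usable capacity and surviving any m lost fragments. This sketch assumes each copy or fragment sits in a separate failure domain (drive or node); the layouts shown are illustrative.

```python
from fractions import Fraction


def replication(copies: int) -> dict:
    """n-way mirroring: n full copies, survives n - 1 lost copies."""
    return {"scheme": f"{copies}x replication",
            "raw_per_usable": Fraction(copies),
            "failures_tolerated": copies - 1}


def erasure_coding(k: int, m: int) -> dict:
    """k data fragments + m parity fragments: any k fragments rebuild the data."""
    return {"scheme": f"EC {k}+{m}",
            "raw_per_usable": Fraction(k + m, k),
            "failures_tolerated": m}


for s in (replication(3), erasure_coding(4, 2), erasure_coding(8, 3)):
    print(f'{s["scheme"]:>15}: {float(s["raw_per_usable"]):.3f} TB raw per TB usable, '
          f'tolerates {s["failures_tolerated"]} lost copies/fragments')
```

Here EC 4+2 tolerates the same two failures as triple replication with half the raw capacity. The cost is that rebuilding a lost fragment requires reading k surviving fragments, which adds network traffic and CPU work, so some systems prefer replication for small or latency-sensitive data.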

Support for Diverse Data Types

AI models require enormous and varied datasets, encompassing types such as text, images, video, and even sensor data. An effective AI storage provider must support these diverse data formats while ensuring seamless data availability for training and inference. Compatibility with various file systems, object storage protocols, and APIs is key to achieving this.

Supporting structured (databases), unstructured (media files), and semi-structured (logs) data on one platform lets organizations centralize their data. Consolidating diverse data sources also simplifies workflows by reducing the need for format conversions and for moving data between systems.

Integration with AI Frameworks

A capable AI storage platform should integrate easily with popular AI frameworks such as TensorFlow, PyTorch, and scikit-learn. Direct integration reduces the complexity of building data pipelines and improves efficiency, because teams spend less time bridging incompatible technologies or writing custom data management layers.

Integration with containerization and orchestration technologies such as Docker and Kubernetes also lets teams scale AI solutions dynamically. Storage that plugs into these standard tools adapts to the evolving needs of AI environments, preserves flexibility, and helps avoid vendor lock-in.
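When a framework trains directly from shared storage, each data-parallel worker typically reads its own disjoint slice of the dataset, an approach similar in spirit to PyTorch's `DistributedSampler`. The framework-agnostic sketch below assumes a flat list of hypothetical object keys: every worker computes the same seeded shuffle for the epoch, then takes every `world_size`-th key starting at its `rank`, so no object is read twice in an epoch. Unlike `DistributedSampler`, it does not pad, so workers may receive one key more or fewer than their peers.

```python
import random


def shard_keys(keys, rank: int, world_size: int, epoch: int, seed: int = 0) -> list:
    """Return the object keys that worker `rank` should read during `epoch`.

    Every worker computes the same permutation (same seed + epoch), then takes a
    strided slice, so the slices are disjoint and together cover every key once.
    """
    order = sorted(keys)                        # identical starting order on every worker
    random.Random(seed + epoch).shuffle(order)  # deterministic reshuffle per epoch
    return order[rank::world_size]


# Hypothetical dataset stored as 10 tar shards in an object storage bucket.
keys = [f"train/shard-{i:04d}.tar" for i in range(10)]
for rank in range(4):
    print(rank, shard_keys(keys, rank, world_size=4, epoch=1))
```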

Notable AI Storage Providers

1. Cloudian

Cloudian HyperStore is an AI-ready object storage platform for large-scale, data-intensive AI workloads. Built to manage unstructured data at exabyte scale, it provides high-throughput, low-latency performance through a distributed architecture optimized for AI training and inference.

Key features:

- AI-ready performance: Achieves 200 GiB/s read throughput on a modest 6-node cluster (12U total). This object storage performance translates to roughly 33 GiB/s per storage node.

- Integration with AI ecosystems: Supports data-centric AI applications with a distributed architecture that handles thousands of concurrent operations. It integrates with NVIDIA GPUDirect and works with leading ML frameworks such as TensorFlow, PyTorch, and Spark ML, enabling parallel training directly from object storage.

- Scalable object storage: Offers limitless scalability through a modular, non-disruptive expansion model. Enables unified management across geographically distributed sites, supporting AI data growth without re-architecting infrastructure.

- S3 API compatibility: Delivers the industry’s highest level of compatibility with the AWS S3 API, ensuring seamless integration with cloud-native AI tools and services.

- Advanced data management: Offers rich metadata tagging, versioning, and HyperSearch for fast data discovery. Multi-tenancy and security features ensure secure, compliant storage for AI workloads.

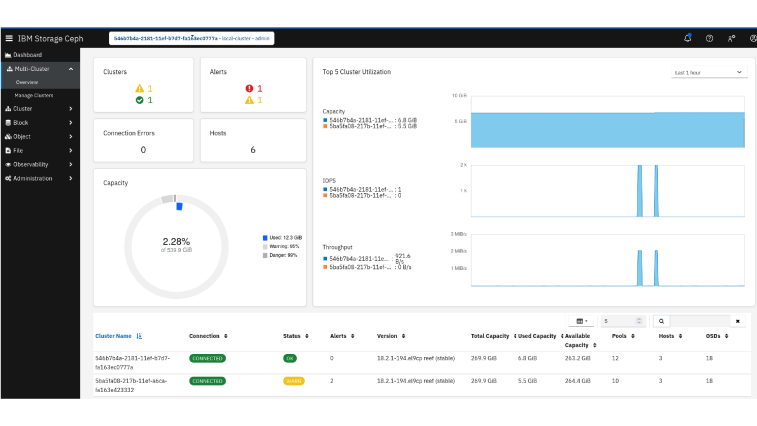

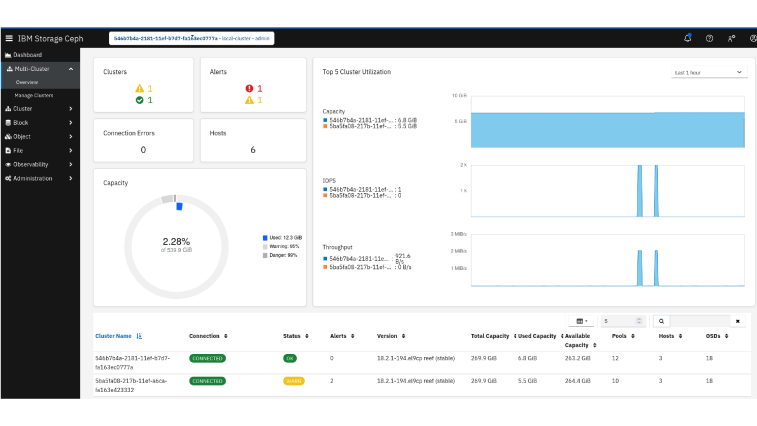

2. IBM

IBM offers a suite of AI storage solutions to support data-intensive workloads across AI, machine learning, and analytics environments. Its platform consolidates file, block, and object storage services, optimizing performance and scalability for cloud and on-premises deployments.

Key features:

- Unified storage architecture: Combines file, block, and object storage into a single platform for simplified data access and management.

- High performance and low latency: Delivers consistent data throughput to support AI training and inference workloads.

- Content-aware intelligence: Unlocks semantic meaning in unstructured data, improving the effectiveness of AI models and assistants.

- Software-defined infrastructure: Supports scale-out deployment for AI, machine learning, and high-performance computing.

- Deployment options: Operates across edge, on-premises, and cloud environments.

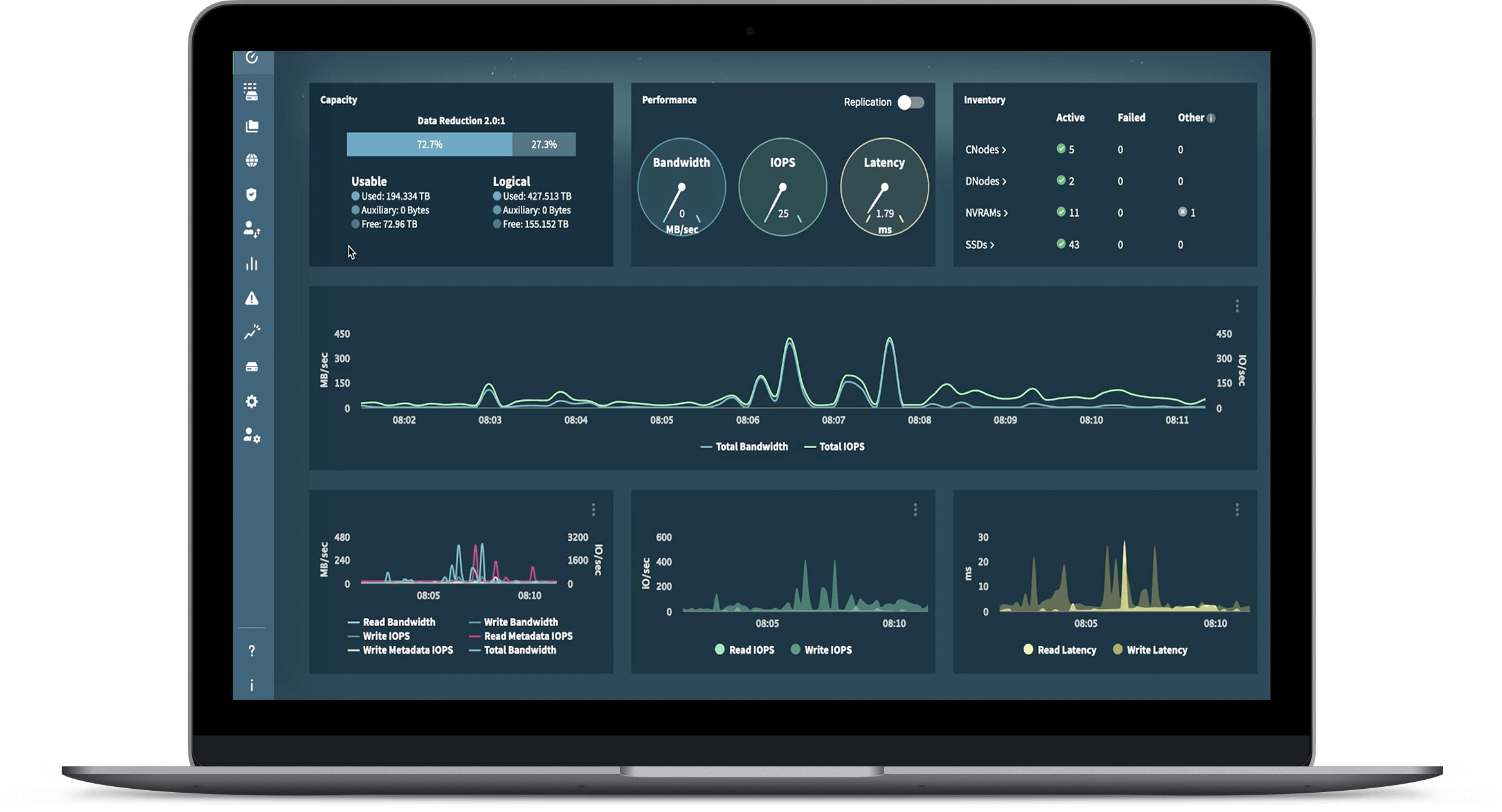

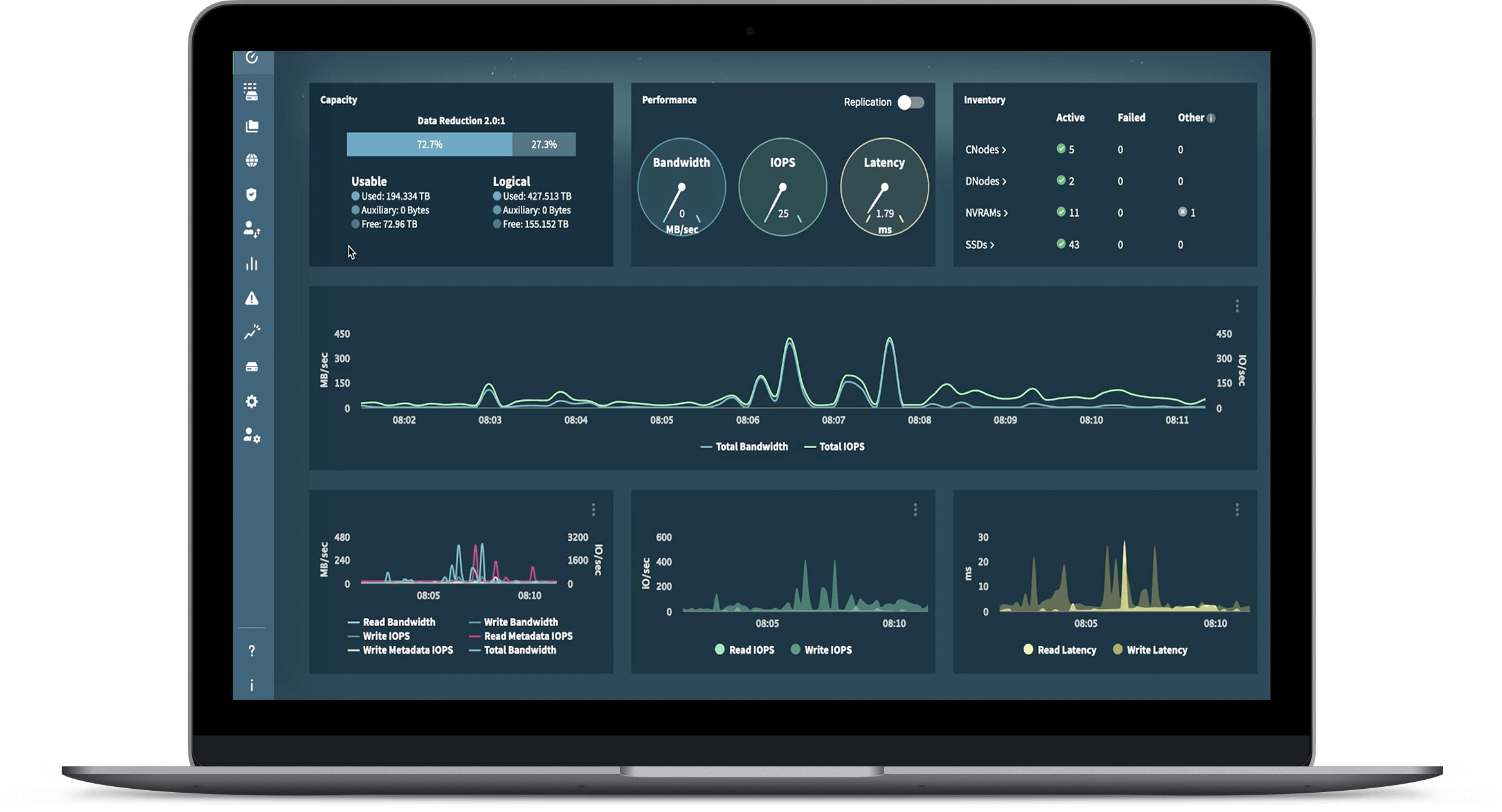

3. Pure Storage

Pure Storage delivers a scalable storage platform for AI workloads. Designed to maximize GPU efficiency, the platform supports massive-scale training with over 10 TB/s throughput and a unified data pipeline architecture.

Key features:

- Throughput at scale: Offers up to 3.2 TB/s throughput per data center rack, within an exabyte-scale namespace to accelerate AI model training.

- Unified AI data pipeline: Runs the same Purity OS across all stages of the AI lifecycle, simplifying architecture and improving efficiency from ingest to inference.

- Performance SLAs for AI: Provides the only SLA-backed performance guarantees in the industry, ensuring predictability and reducing deployment risk.

- AI-ready FlashBlade architecture: Supports large-scale, GPU-intensive workloads with a highly parallel, disaggregated architecture that simplifies management.

- Validated AI infrastructure designs: Eliminates guesswork with pre-tested, AI-ready reference architectures to speed up and de-risk implementation.

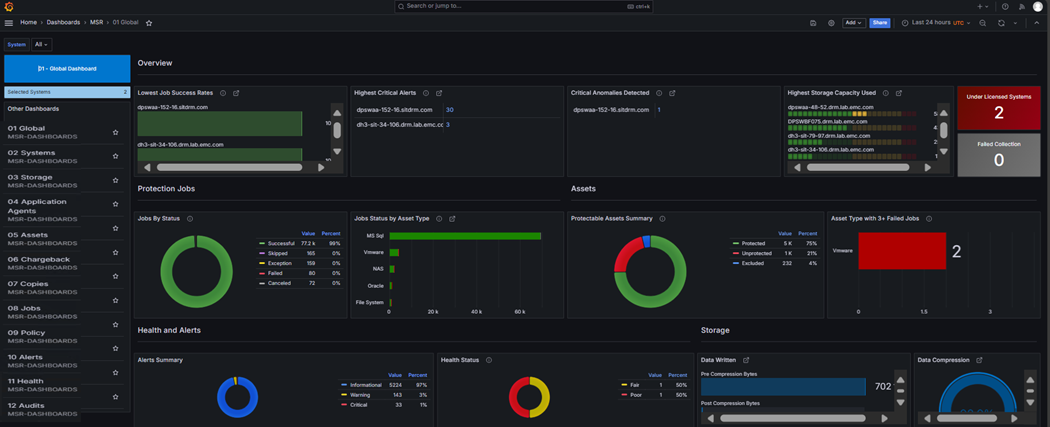

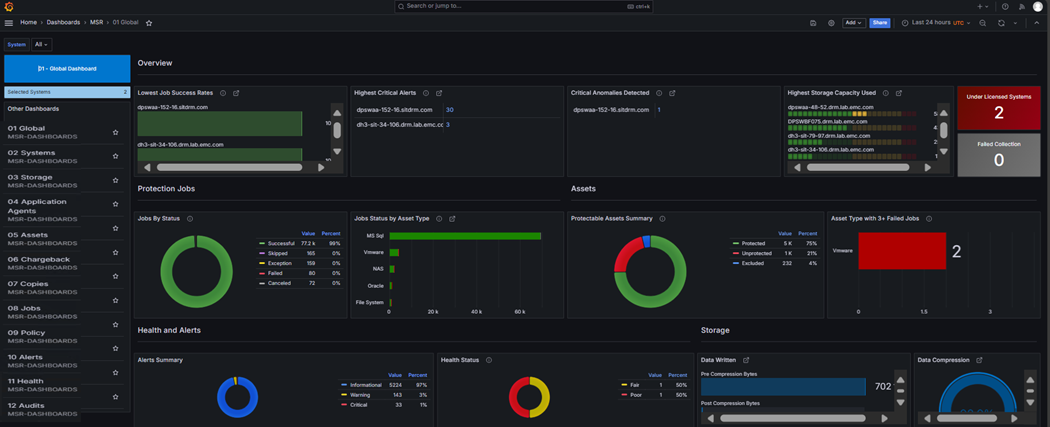

4. VAST Data

VAST Data delivers a unified data platform to accelerate the AI data pipeline from data capture and preparation to model training and serving. By eliminating the need for tiered storage systems and reducing data movement, it simplifies operations while offering large-scale performance and reliability.

Key features:

- AI data pipeline coverage: Supports all phases of AI workflows, including ingest, data preparation, training, and serving.

- Unified multi-protocol support: Handles unstructured and structured data using NFS, SMB, S3, and SQL-compatible engines like Spark and Trino.

- AI-optimized data access: Offers NFS-over-RDMA and NVIDIA GPUDirect Storage access for fast data delivery.

- Data availability: Enables simultaneous high-performance access across locations using a global namespace with write consistency.

- Simplified data pipelines: Reduces the need for manual data copying by allowing AI workloads to access shared datasets concurrently.

5. Dell

Dell’s AI Data Platform delivers a scalable foundation to meet the demands of AI-driven organizations. By integrating Dell PowerScale storage with a flexible data lakehouse architecture, it enables data ingestion, processing, and protection.

Key features:

- Unified architecture with PowerScale and Data Lakehouse: Combines Dell PowerScale storage with an open lakehouse framework to manage structured and unstructured data.

- Scalable data ingestion and access: Aggregates data from distributed edge sources into persistent, GPU-optimized storage for AI workloads.

- Integrated data processing for AI: Enriches and merges structured and unstructured datasets, supporting analytics and AI model development.

- Enterprise-grade data protection: Ensures data resilience and compliance through security, threat detection, and automated backup strategies.

- Petabyte-scale performance: Handles the throughput and storage demands of large AI models with low-latency access.

Evaluation Criteria for Selecting AI Storage Providers

Choosing the right AI storage provider is crucial to supporting scalable, high-performance AI initiatives. The selection should be guided by both technical capabilities and operational requirements. Here are key considerations:

- Performance benchmarks: Evaluate read/write throughput, IOPS, and latency under realistic AI workload simulations. Look for results published with industry-standard benchmarks such as MLPerf Storage, or run a proof of concept with representative data and models to confirm performance claims.

- Scalability and flexibility: Ensure the solution can scale on both performance and capacity without major re-architecture. The ability to add nodes or storage capacity on demand is vital for growing datasets and increasing model complexity.

- Compatibility with AI tools and workflows: Confirm integration with machine learning frameworks (e.g., TensorFlow, PyTorch), container platforms (e.g., Docker), and orchestration tools (e.g., Kubernetes). Native support for these tools reduces operational friction and setup time. Native S3 API support is especially valuable, because S3 is the most widely supported object storage API among these tools.

- Data management and automation: Prioritize systems with automated tiering, snapshotting/versioning, and lifecycle management. These features reduce manual intervention and help manage costs by optimizing storage allocation across hot, warm, and cold data.

- Multi-protocol and multi-cloud support: Look for NFS and SMB file protocols alongside native S3 API support to integrate with diverse applications. Hybrid and multi-cloud deployment options provide flexibility in placing storage close to compute resources.

- Security and compliance: The platform should offer encryption at rest and in transit, fine-grained access controls, and compliance with industry standards. Secure data handling is especially important in regulated industries.

- Vendor ecosystem and support: Evaluate the provider’s ecosystem of partners, certifications, and customer references. Reliable support, including 24/7 availability and proactive monitoring tools, is critical for production environments.

- Total cost of ownership (TCO): Factor in not just upfront costs but also operational expenses, scalability-related charges, and potential vendor lock-in. Transparent pricing models and flexible licensing can significantly impact long-term value.
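For the performance-benchmarks criterion, a quick smoke test can measure sequential read throughput and small random-read latency on a mounted file system. This is a minimal sketch only: it uses a hypothetical 16 MiB scratch file, and because a freshly written file is usually served from the operating system's page cache, its numbers will overstate storage performance. A real evaluation should use datasets larger than host memory and purpose-built tools such as fio or MLPerf Storage.

```python
import os
import random
import statistics
import tempfile
import time


def sequential_read_mib_s(path, block_size=4 * 2**20):
    """Read the whole file in large blocks and return throughput in MiB/s."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:
        while chunk := f.read(block_size):
            total += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero timer delta
    return total / 2**20 / elapsed


def random_read_latencies_ms(path, io_size=4096, samples=1000, seed=0):
    """Time `samples` reads of `io_size` bytes at random offsets; returns milliseconds."""
    size = os.path.getsize(path)
    rng = random.Random(seed)
    latencies = []
    with open(path, "rb", buffering=0) as f:
        for _ in range(samples):
            offset = rng.randrange(max(1, size - io_size + 1))
            t0 = time.perf_counter()
            f.seek(offset)
            f.read(io_size)
            latencies.append((time.perf_counter() - t0) * 1000)
    return latencies


# Hypothetical scratch file; point `path` at data on the storage under evaluation instead.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(os.urandom(16 * 2**20))
    path = tmp.name

throughput = sequential_read_mib_s(path)
latencies = random_read_latencies_ms(path)
p50 = statistics.median(latencies)
p99 = statistics.quantiles(latencies, n=100)[98]  # 99th percentile cut point
print(f"sequential read: {throughput:.0f} MiB/s")
print(f"4 KiB random reads: p50 {p50:.3f} ms, p99 {p99:.3f} ms")
os.remove(path)
```

Reporting the p99 alongside the median matters for inference, because the slowest reads determine worst-case response times.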

Conclusion

As AI workloads grow in scale and complexity, the importance of purpose-built storage solutions continues to rise. An effective AI storage system must deliver high-speed access, support for diverse data types, seamless integration with AI pipelines, and data management capabilities. Selecting the right storage infrastructure is essential for optimizing model training and inference.