Here are 6 tips for getting the most from your object storage project.

By Neil Stobart

VP of WW Sales Engineering, Cloudian

(Re-post of sdx Central article)

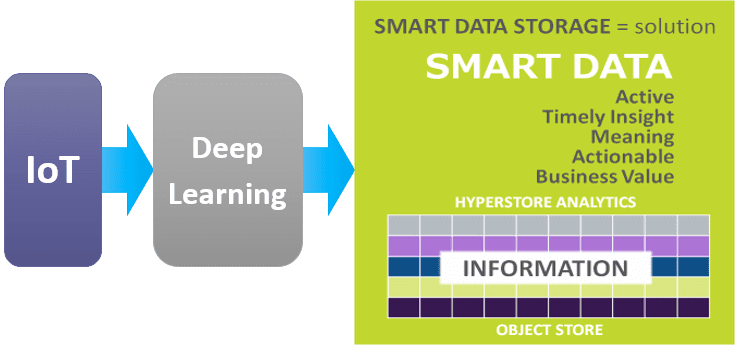

IT managers face new storage challenges as companies generate growing volumes of unstructured data. Whether it’s high-res images, backup data, or IoT-generated information, this data needs to be searchable and instantly accessible to facilitate analysis and data mining. IT professionals increasingly find that object storage addresses these challenges in a simple and cost-effective way. But, object storage is new in many data centers and presents questions about how best to manage it. Here are six best practices that will help you get the most from object storage.

IT managers face new storage challenges as companies generate growing volumes of unstructured data. Whether it’s high-res images, backup data, or IoT-generated information, this data needs to be searchable and instantly accessible to facilitate analysis and data mining. IT professionals increasingly find that object storage addresses these challenges in a simple and cost-effective way. But, object storage is new in many data centers and presents questions about how best to manage it. Here are six best practices that will help you get the most from object storage.

Best Practice No. 1

Identify workloads that make sense for object storage.

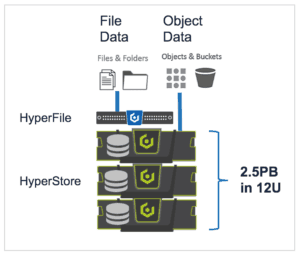

With multi-petabyte scalability, object storage is best for data-intensive applications. Consider object storage for applications that require streaming throughput (Gb/s) rather than high transaction rates (IOPs). Examples are backup, data archiving, IoT, CCTV, voice recordings, log files, and media files. As one option, consider tiered storage infrastructures that let you transparently move data from high-performance storage to object storage.

Object storage offers compelling economies for large data sets, so you can keep more data online and available on-demand. But storage is not one-size-fits-all. Review your applications and determine where object storage makes more sense than other storage types. Traditional storage arrays or All-Flash systems will continue to make sense for high IOPs\low-latency applications and for smaller data set sizes (think Oracle, SQL databases, email servers, ESX server farms, and VDI).

Best Practice No. 2

Beware of the 1PB failure domain.

High-density storage servers now offer capacities nearing 1PB in a single device. With such high storage density, these devices can be very attractive from a cost standpoint. But make sure you’ve thought through the implications of managing this much storage in a single device. Even if you’re protected from data loss, you might still be looking at a long rebuild time in the event of device failure. To reduce rebuild times, logically divide large servers into multiple independent nodes. Also, use erasure coding to build cluster configurations that are resilient to multiple device failures. That way, if you were to encounter a second failure during a rebuild, you’re still protected.

Best Practice No. 3

Use QoS and multi-tenancy to consolidate different workloads on a single platform.

A key benefit of object storage is the great scalability, which lets you simplify management by consolidating users and applications onto a single system. Within that shared environment, however, the system must deliver service levels that meet each users’ needs: they each require storage capacity, security, and predictable performance. To achieve this, make sure your system is configured with isolated storage domains plus quality-of-service controls. The combination will eliminate the two main challenges of shared storage: the nosey neighbor and the noisy neighbor problems. Your users will thank you.

A key benefit of object storage is the great scalability, which lets you simplify management by consolidating users and applications onto a single system. Within that shared environment, however, the system must deliver service levels that meet each users’ needs: they each require storage capacity, security, and predictable performance. To achieve this, make sure your system is configured with isolated storage domains plus quality-of-service controls. The combination will eliminate the two main challenges of shared storage: the nosey neighbor and the noisy neighbor problems. Your users will thank you.

Best Practice No. 4

Consider integrating data management into your application to deliver workflow automation.

The most common “language” of object storage is the S3 API — Amazon Web Services (AWS) simple storage service API — which is revolutionizing how applications can control data. To see why, compare the S3 API with traditional data management protocols such as FC, iSCSI, NFS or SMB. Those protocols only support two basic commands: read and write data. By contrast, the S3 API supports over 400 different verbs that facilitate the management, reporting, and seamless integration with public cloud services. Application owners should be aware of the possibilities built into the S3 API and work with app developers and vendors to capitalize on these advanced services.

Best Practice No. 5

Leverage metadata capabilities.

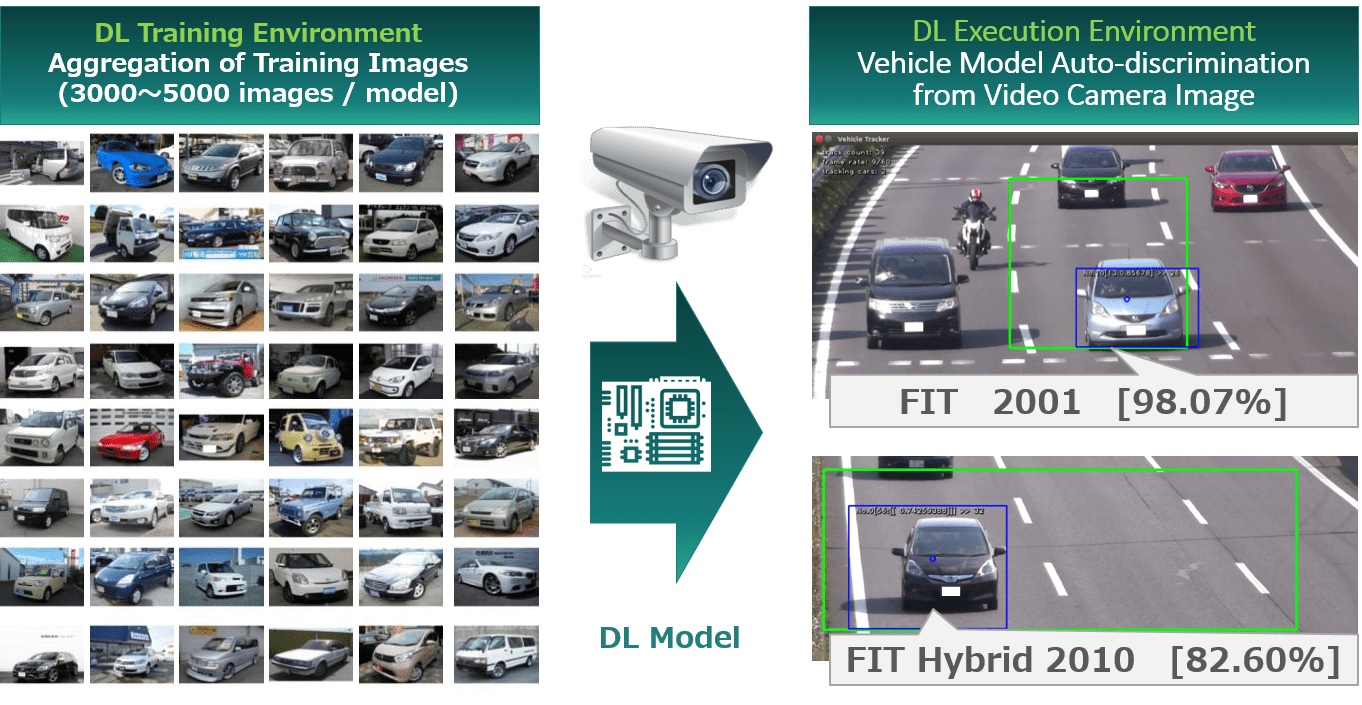

Rich metadata — or data about data — is simply a user-defined tag associated with each object. But that tag’s implications are profound. Object storage has rich metadata tag features built-in, unlike network-attached storage (NAS), which has very limited metadata, or SAN, which has none. Simple as they are, these tags will have a significant impact on data management. They can be readily searched with Google-like tools, and they can be changed over time by applications that analyze your data and extract insights, such as, “What is the name of the person in this image?” By recording that finding in a tag that’s forever connected with the data, wherever that data may be stored, business information can be found and leveraged in seconds. Imagine all data sets being searchable, across all storage pools, with a single Google-like search query.

Application owners should consider their opportunities. Can your data be described in ways that make it more searchable? Could you potentially use tools — either on-prem or in the cloud — to enrich your metadata, thereby adding value to your search process? If so, consider ways to capitalize on the power of metadata.

Best Practice No. 6

Conduct a Proof-of-Concept.

Not all object storage platforms are created equal, and some careful analysis is required to make sure your needs are met. A simple way to eliminate risk is by conducting a proof-of-concept. Document your requirements and share them with your vendor. Undertake what testing is needed to validate both your needs and the vendor’s claims. In many cases, this can be completed quickly and non-disruptively using virtual machines as the test platform. The knowledge you will gain – about your needs, the product, and the vendor’s capabilities – will ensure your project’s success.

Learn more at www.cloudian.com.

This is part of a series of articles about object storage.

YOU MAY ALSO BE INTERESTED IN: