“We are on the cusp of a global pandemic,” Christopher Krebs, the first director of the Cybersecurity and Infrastructure Security Agency (CISA), told Congress in May 2021. Krebs was not talking about a virus-driven pandemic; he was referring to the pandemic of cyber-attacks and data breaches. The warning rang especially true the following week, when the Colonial Pipeline ransomware attack crippled a major artery of the US energy sector and disrupted fuel supplies across the East Coast.

For the uninitiated, ransomware is the fastest-growing malware threat, targeting users and organizations of all types. It works by encrypting the victim's data, rendering both the source data and the backup data useless, and then demanding a ransom, holding the data hostage until payment is received. Payments are usually demanded in hard-to-trace cryptocurrencies, which can (and in many cases do) end up with state-sponsored bad actors.

Today, protecting against and mitigating a ransomware attack are treated as information technology and information security responsibilities, with the C-Suite and Board taking a relatively hands-off approach. But that must change, and in some cases it already is. Here's why C-Suite and Board members should take this threat seriously and be the driving force in protecting the organization against ransomware.

1. To Pay or Not to Pay: Financial Impacts of Ransomware

Ransomware affects organizations of all sizes, across all industries. The security company Sophos (1) found that 51% of surveyed organizations reported being hit by ransomware in 2020, the year of the pandemic. In 73% of those cases, the attackers succeeded in encrypting data, bringing the business to its knees. More than a quarter of the respondents (26%) admitted to paying the ransom, at an average of $761K per incident, a huge increase from previous years, when a similar report had put the average at $133K.

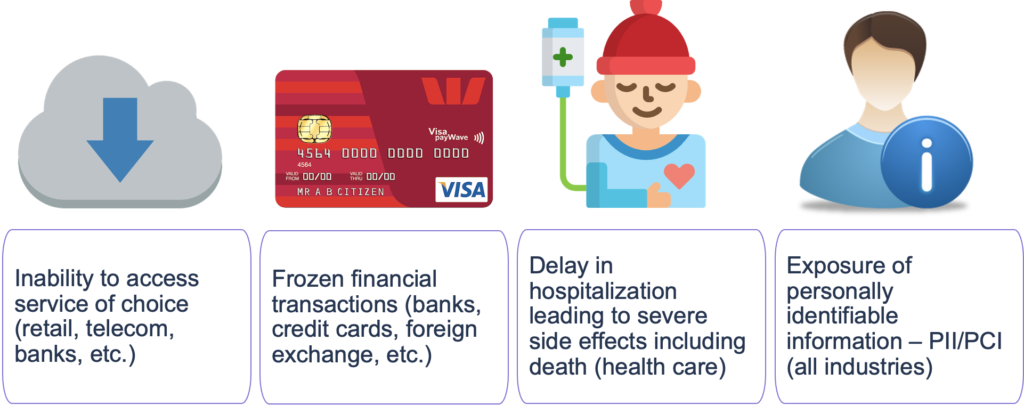

Setting aside the cost of ever-increasing ransom demands, the real impact of ransomware falls on the business itself. It cripples operations and leaves services ineffective or undeliverable. There is also the threat of data exfiltration, which can expose sensitive customer data and leave the organization open to lawsuits and additional financial penalties. None of this even accounts for lost business during downtime or the brand damage that ransomware can cause.

These impacts alone rope in the Director of IT or IS, the CFO, General Counsel, Public Relations, the Chief Privacy Officer, the CIO, and the CISO. The CEO will also be roped in and will have to break the news to her board of directors. It would be far better if she remembers this as the day she was able to say, “We were prepared. We already have the business back up and running. We will not be paying.”

2. The Moral (and Regulatory) Low Ground of Paying a Ransom

Then there is the moral and regulatory dilemma of paying a ransom. US government agencies actively discourage the practice because it encourages similar and copycat attacks. Added to this is the October 2020 advisory from the Department of the Treasury (2), through its Office of Foreign Assets Control (OFAC) and Financial Crimes Enforcement Network (FinCEN), on “Potential Sanctions Risks for Facilitating Ransomware Payments.” Because most ransomware payments are hard to trace, an organization often cannot be sure the recipient is not a sanctioned party, which exposes the organization, its executives, and its board members to potential US sanctions violations.

3. Cyber Insurance: How to Get, Keep, and Save on This Must-Have for Business Continuity

Cyber security insurance, the fastest-growing insurance segment, is another important consideration. As a safeguard, most large organizations carry cyber insurance as part of their cyber defense strategy. But insurers are not immune to US sanctions violations if a payment reaches a sanctioned party such as a rogue nation. As a result, premiums for ransomware coverage are high, and policies may require up to 50% coinsurance. In some cases, insurers may NOT even cover businesses unless they can show significant cyber security arrangements, including data immutability, as part of their cyber security plans.

4. The Human Cost of Ransomware

Finally, beyond the business, insurance, and regulatory impacts, the most reprehensible danger of ransomware is its human cost. This applies across all industries. From disrupted critical utilities in the energy sector and declined credit card and bank transactions in the financial sector to delayed patient care, delayed emergency treatment, and even death in the healthcare sector, the impact of ransomware is real, direct, and all too inhumane.

5. Getting Organized: Plan, Don’t Pay

Without a regularly drilled, top-down plan for responding to a ransomware attack, an organization is going to make mistakes in the heat of an attack. It will pay for those mistakes, whether in ransoms to anonymous attackers, in ransomware-induced PR nightmares, or in higher cyber insurance premiums levied for lack of proper preparation and protection. Keeping the business safe is not just the responsibility of the IT/IS department; it is the obligation of every CXO and Board member to ask for and implement stringent cyber security measures, starting with zero trust, perimeter security, and employee training. But don't forget to protect the attackers' ultimate prize, your backup data, in immutable WORM (write once, read many) storage.

For all these reasons, ransomware MUST be a C-suite and Board-led conversation. Forrester analysts write: “Implementing an immutable file system with underlying WORM storage will make the system watertight from a ransomware protection perspective.” Data immutability through WORM features such as S3 Object Lock is also now a requirement for many cyber insurance policies to cover the threat of ransomware.
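For readers who want to see what WORM protection looks like in practice, here is a minimal Python sketch of applying a default S3 Object Lock retention rule to a backup bucket. It assumes the bucket was created with Object Lock enabled and that the client passed in is a boto3 S3 client (or any S3-compatible client exposing `put_object_lock_configuration`). The helper names, the bucket name, and the 30-day COMPLIANCE default are illustrative assumptions, not a recommended retention policy or any vendor's specific method.

```python
from typing import Any, Dict, Protocol

# Retention modes defined by the S3 Object Lock API.
# COMPLIANCE: no user can overwrite or delete a locked object version until retention expires.
# GOVERNANCE: users with special permissions can still bypass the lock.
VALID_MODES = {"GOVERNANCE", "COMPLIANCE"}


class S3ClientLike(Protocol):
    """The one method this sketch relies on; a boto3 S3 client provides it."""

    def put_object_lock_configuration(self, **kwargs: Any) -> Dict[str, Any]: ...


def build_object_lock_config(mode: str, days: int) -> Dict[str, Any]:
    """Build the ObjectLockConfiguration payload for a default retention rule."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}, got {mode!r}")
    if days <= 0:
        raise ValueError("retention period must be a positive number of days")
    return {
        "ObjectLockEnabled": "Enabled",
        # Default retention is applied to every new object version written to the bucket.
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


def apply_default_retention(
    client: S3ClientLike,
    bucket: str,
    mode: str = "COMPLIANCE",  # hypothetical default chosen for illustration
    days: int = 30,            # hypothetical retention window
) -> Dict[str, Any]:
    """Set a default WORM retention rule on a bucket that already has Object Lock enabled."""
    config = build_object_lock_config(mode, days)
    return client.put_object_lock_configuration(Bucket=bucket, ObjectLockConfiguration=config)
```

With boto3, you would create the bucket with `create_bucket(..., ObjectLockEnabledForBucket=True)` and then call `apply_default_retention(boto3.client("s3"), "example-backup-bucket")`, optionally passing `endpoint_url` to `boto3.client` for an S3-compatible store. Passing the client in, rather than creating it inside the function, keeps the retention logic testable without network access.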

To learn more about solutions for ransomware protection, please visit

https://cloudian.com/lp/lock-ransomware-out-keep-data-safe-ent/

Citation:

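1. Sophos, “The State of Ransomware 2020”: https://www.sophos.com/en-us/medialibrary/Gated-Assets/white-papers/sophos-the-state-of-ransomware-2020-wp.pdf

2. U.S. Department of the Treasury, OFAC, “Advisory on Potential Sanctions Risks for Facilitating Ransomware Payments” (October 1, 2020): https://home.treasury.gov/system/files/126/ofac_ransomware_advisory_10012020_1.pdf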

Amit Rawlani, Director of Solutions & Technology Alliances, Cloudian